Introduction to Quest

Quest is Northwestern’s primary computing cluster for research. Quest comprises multiple types of resources that users can interact with in different ways.

The foundation of Quest is a high-performance computing (HPC) cluster with both traditional compute nodes and GPU nodes. Users can submit computational jobs to Quest ranging from those that run for a few minutes using a single compute core to jobs that run for hours and use multiple full nodes with dozens of compute cores each. Quest contains both General Access resources that are available for all Northwestern researchers to use for free and Priority Access resources that have been purchased by research teams with extensive or specialized resource needs.

Quest also supports interactive computing for researchers who are iteratively developing code or analyzing data.

Quest Overview

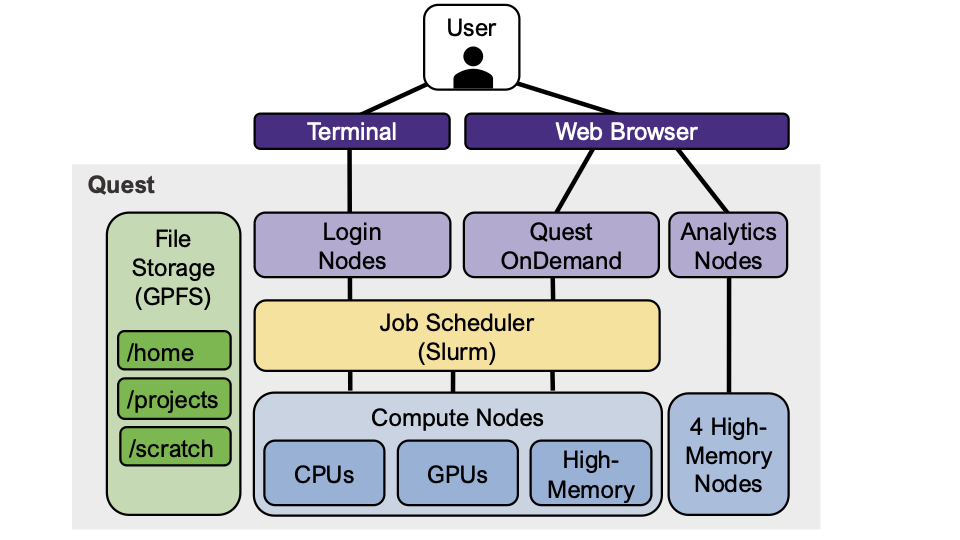

Users connect to Quest from their personal computer via one of three methods:

SSH (the primary connection method): a Secure Shell connection from a terminal program to the login nodes.

Quest OnDemand in a web browser, to submit batch and interactive computational jobs to the job scheduling system (Slurm).

Quest Analytics Nodes in a web browser, to work interactively with R or Python in sessions requiring small to moderate compute resources from a limited set of nodes.

After connecting to Quest, users have access to compute resources. The login nodes and Quest OnDemand provide access to Quest’s hundreds of compute nodes through a job scheduling system called Slurm. Users submit requests for compute resources to Slurm, and Slurm manages the requests from users and runs submitted jobs when the resources are available. Users logging in through the Quest Analytics Nodes will be connected to one of four high-memory nodes, the resources of which are shared by multiple users and cannot be reserved.
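To make the request-and-schedule cycle more concrete, here is a minimal Python sketch that assembles the text of a Slurm batch script, which is how a resource request is typically expressed. The `#SBATCH` options used (`--account`, `--partition`, `--time`, `--nodes`, `--ntasks-per-node`, `--mem`, `--job-name`) are standard Slurm options. However, the account ID `p12345`, the partition name `short`, and the `JobRequest`/`render_batch_script` names are hypothetical placeholders chosen for illustration. Consult the Quest User Guide for the values that apply to your allocation.

```python
from dataclasses import dataclass


@dataclass
class JobRequest:
    """Resources to request from Slurm. All example values are hypothetical."""
    account: str           # allocation ID (hypothetical, e.g. "p12345")
    partition: str         # partition name (hypothetical, e.g. "short")
    time: str              # wall-clock limit as HH:MM:SS
    nodes: int = 1
    ntasks_per_node: int = 1
    mem: str = "4G"        # memory per node
    job_name: str = "example_job"


def render_batch_script(req: JobRequest, command: str) -> str:
    """Return the text of a Slurm batch script for the given request."""
    directives = [
        f"--account={req.account}",
        f"--partition={req.partition}",
        f"--time={req.time}",
        f"--nodes={req.nodes}",
        f"--ntasks-per-node={req.ntasks_per_node}",
        f"--mem={req.mem}",
        f"--job-name={req.job_name}",
    ]
    lines = ["#!/bin/bash"]                        # Slurm batch scripts are shell scripts
    lines += [f"#SBATCH {d}" for d in directives]  # resource requests read by Slurm
    lines += ["", command]                         # the work to run once resources are granted
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical account and partition; replace with your own allocation's values.
    req = JobRequest(account="p12345", partition="short", time="00:10:00")
    print(render_batch_script(req, "python my_analysis.py"))
```

If the printed text is saved to a file such as `job.sh`, it would be submitted with `sbatch job.sh`. Slurm then queues the request and starts the job when matching resources become free.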

Quest’s file storage system can be accessed from all Quest resources. It uses IBM’s General Parallel File System (GPFS), which provides high-performance connections to the compute nodes. Quest has three storage locations, each optimized for different uses: /home, /projects, and /scratch. Learn more about when to use each storage location in the Quest User Guide.

A large selection of open-source software, and some licensed software, is available centrally on Quest; see the Quest software list and learn how to use software modules. Users can also install their own software, including R and Python packages.

Getting Started

Tip

To use Quest, you will need an account with an allocation of computational and storage resources. Learn more and apply here.

Your next steps depend on what type of work you plan to do on Quest.

Do you primarily work interactively with Python or R? Are your computational needs modest (< 8 cores, < 100 GB of RAM)?

You may want to start by working on the Quest Analytics Nodes:

Learn about Quest’s different storage systems

Learn how to transfer files to and from Quest

Read the overview of the Analytics Nodes and tips for using them effectively

Start working on the Analytics Nodes

Will you be using the Genomics Compute Cluster (GCC)?

See the Genomics Compute Cluster Quick Start guide. The required GCC orientation will also help you get started using Quest and the GCC resources effectively.

Are you an experienced HPC user?

Browse the Quest User Guide for key information needed to use Quest

Find the software modules you need

If you run interactive jobs, consider using Quest OnDemand

Are you a new HPC user?

Review the key terms below that might be new to you

Learn about working in a Bash shell from the command line through one of our recommended resources

Read through the Quest User Guide

Review any tips for using your preferred software on Quest

Review HPC Best Practices to help you use Quest effectively

Try submitting your first job on Quest

Note: If you plan to run interactive jobs that use significant computational resources, or software other than R or Python, learn about Quest OnDemand.

Tip

Not sure where to start? Schedule a consultation with the Research Computing Services team to discuss your computational needs and workflow.

Key Terms

As you get started with Quest and work your way through the documentation and Quest User Guide, you may encounter some new terms.

| Term | Meaning |
|---|---|
| Node | A computer. Quest’s nodes have multiple cores and gigabytes of memory (RAM). |
| Core | A processing unit that most effectively handles a single computational process. All of the nodes on Quest have multiple cores. |
| Job | A computational task, usually defined through a script. Within the context of an HPC cluster, a job often refers more specifically to a script formally submitted to a job scheduling system that specifies the required computational and software resources. |
| Batch job | A job that runs without human intervention once it starts. |
| Interactive job | A computational job or session where the user enters commands, waits for output, and then enters additional commands iteratively. |
| Home directory | A user-specific directory in the file system of a multi-user system, often used as a user’s default directory. On Quest, this is |
| Allocation | A specific set of computing and/or storage resources that you have access to on a system. Allocations are usually granted for a specific period of time. To use Quest, you need an active allocation. |
| General Access | Quest resources that are available to Northwestern researchers at no cost. |
| Priority Access | Quest compute or storage resources that have been purchased by a specific research group for its dedicated use. |
| Linux | The type of operating system Quest runs, in contrast to Windows or macOS. |
| RHEL8 | Red Hat Enterprise Linux, version 8. The specific version of Linux that Quest uses. |
| SSH | Secure Shell. A protocol that allows you to connect to a remote node or server from another computer, such as your laptop. |
| Login node | When you connect to Quest via SSH, you connect to a login node. Login nodes are used for small tasks such as managing files, testing code, installing software, and submitting jobs to Slurm. Users should avoid running computationally intensive tasks directly on the login nodes. |
| Slurm | Quest’s job scheduling software. Users submit jobs to Slurm, which allocates Quest’s resources and runs the submitted jobs. |
| Parallelization | Dividing a computational task into smaller subtasks that run simultaneously on multiple cores. |
| Scratch | Temporary storage with an automatic deletion process. Best used for intermediate outputs or files that will be moved to a different storage system. |
| Cluster | A collection of connected nodes, usually with attached storage. Quest is an HPC cluster. |
| HPC | High-performance computing. A computing cluster that provides multiple nodes that can be used simultaneously to run programs that are too large for a single node. An HPC cluster may also be referred to as a supercomputer. |
| Command line | Working in a terminal program where the user types commands at a command prompt, often without a mouse. Learn more from resources in our Command Line Resource Guide. |
| Bash | An interactive command interpreter (program or shell) and programming language. When you’re working at the command line on Quest, you’re using Bash commands and working in a Bash shell. Job submission scripts for Slurm are written in Bash. |
| GUI | Graphical User Interface. A piece of software with buttons, icons, dropdown menus, and other components that you can interact with via a mouse or touchscreen, in contrast to a command line program. |
| Partition | A collection of specific compute resources on an HPC cluster. On Quest, you choose the partition to submit a Slurm job to based on how long the job needs to run and the specific compute resources you want to use (e.g., GPUs or purchased resources). |
| Generation | Quest compute nodes have been installed in multiple batches over time. Different generations of compute nodes may have different hardware specifications or architectures. See Quest specifications for information on Quest’s generations. |
| InfiniBand | The high-speed network that connects Quest’s storage and nodes. |
| RCDS | Research Computing and Data Services; the team within Northwestern IT that owns Quest and provides user support for it. The Research Computing Infrastructure team, also part of Northwestern IT, supports Quest’s infrastructure. |
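The Parallelization and Core entries above can be illustrated with a small, generic Python sketch; it is not a Quest-specific tool. It splits one task, summing squares over a range, into chunks and runs each chunk in a separate process using the standard library’s `concurrent.futures.ProcessPoolExecutor`. The helper names are hypothetical. The worker count comes from Slurm’s `SLURM_CPUS_PER_TASK` environment variable, which Slurm sets only when a job requests `--cpus-per-task`. Otherwise the sketch falls back to `os.cpu_count()`, which reports every core on the machine rather than the cores allocated to you. Code like this belongs inside a Slurm job, not on a login node.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(chunk: range) -> int:
    """One subtask: sum the squares of the numbers in a chunk."""
    return sum(i * i for i in chunk)


def available_workers() -> int:
    """Cores granted by Slurm via --cpus-per-task, else all cores the OS reports."""
    slurm_cpus = os.environ.get("SLURM_CPUS_PER_TASK")
    if slurm_cpus:
        return int(slurm_cpus)
    return os.cpu_count() or 1


def parallel_sum_of_squares(n: int, workers: int) -> int:
    """Split range(n) into at most `workers` chunks and process them in parallel."""
    if n <= 0:
        return 0
    step = -(-n // workers)  # ceiling division so every number lands in some chunk
    chunks = [range(start, min(start + step, n)) for start in range(0, n, step)]
    # Each chunk runs in its own process, so chunks can use separate cores.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


if __name__ == "__main__":
    n = 100_000
    workers = available_workers()
    result = parallel_sum_of_squares(n, workers)
    assert result == sum_of_squares(range(n))  # parallel result matches the serial one
    print(f"{workers} workers: {result}")
```

Separate processes are used rather than threads because, in the default CPython build, the global interpreter lock prevents threads from executing Python code on multiple cores at the same time.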