MATLAB#

MATLAB can be run on Quest using a single core, multiple cores on the same node, or with MPI support to utilize multiple nodes. Which option is right for you depends on the type of MATLAB code you are running and whether parallelization (either explicit, through parfor, or implicit, through MATLAB’s internal multithreading) will speed up your operations.

Prefer Videos?

Try watching the Using MATLAB on Quest workshop recording.

Single Core Jobs#

In many situations, it will be most beneficial to run a single core MATLAB job.

By default, MATLAB uses multithreading and tries to use all of the cores available on the computer it is running on. On your laptop, this does not cause a problem. On Quest, however, MATLAB may then try to use more cores on a compute node than you requested, which will result in your job being terminated.

If you are not explicitly parallelizing your code, you need to tell MATLAB to only use a single core by passing the command line argument -singleCompThread when starting MATLAB.

Below we provide an example submission script called example_submit.sh and an example MATLAB script called simple.m.

example_submit.sh

#!/bin/bash

#SBATCH --account=w10001 ## YOUR SLURM ACCOUNT pXXXX or bXXXX

#SBATCH --partition=w10001 ### PARTITION (buyin, short, normal, w10001, etc)

#SBATCH --nodes=1 ## Never need to change this

#SBATCH --ntasks-per-node=1 ## Never need to change this

#SBATCH --time=00:10:00 ## how long does this need to run (remember different partitions have restrictions on this param)

#SBATCH --mem=4G ## how much RAM you need per computer (this affects your FairShare score, so be careful not to ask for more than you need)

#SBATCH --output=matlab_job.out ## standard output and standard error go to this file

## job commands; "simple" is the name of the MATLAB .m file, specified without the .m extension

module load matlab/r2023b

matlab -singleCompThread -batch "input_arg1='arg1';input_arg2='arg2';input_arg3='arg3';simple"

simple.m

%input_arg1 is set in the submission script that calls this file

%input_arg2 is set in the submission script that calls this file

%input_arg3 is set in the submission script that calls this file

disp(input_arg1)

disp(input_arg2)

disp(input_arg3)

disp('Start Sim')

t0 = tic;

for i = 1:100000

A = rand(1);

end

t = toc(t0);

X = sprintf('Simulation took %f seconds',t);

disp(X)

If both of these files are in the same folder, you can submit this job to Slurm with the command:

$ sbatch example_submit.sh
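The -batch argument above is one string of MATLAB statements: each assignment creates a variable in the MATLAB workspace, and the final statement runs simple.m. With many arguments, writing that string by hand becomes error-prone, so here is a minimal bash sketch that assembles it. The helper name build_batch_statement is hypothetical (it is not part of MATLAB or Slurm); it also doubles any single quote in a value, since that is how a literal quote is written inside a MATLAB character vector.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of MATLAB or Slurm): build the statement passed
# to `matlab -batch`. Usage: build_batch_statement SCRIPT_NAME [ARG1 ARG2 ...]
# Each argument becomes input_argN='value'; the script name (no .m) runs last.
build_batch_statement() {
  local script="$1"
  shift
  local q="'" statement="" value
  local i=1
  for value in "$@"; do
    # Inside a MATLAB character vector, a literal single quote is written as ''
    value=${value//"$q"/"$q$q"}
    statement+="input_arg${i}='${value}';"
    i=$((i + 1))
  done
  printf '%s\n' "${statement}${script}"
}

build_batch_statement simple arg1 arg2 arg3
# In a job script the result could be passed straight to MATLAB, e.g.:
# matlab -singleCompThread -batch "$(build_batch_statement simple arg1 arg2 arg3)"
```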

Submitting multiple single core jobs#

One approach to parallelization with MATLAB is to submit multiple independent MATLAB jobs that each use a single core but take different input parameters such as starting values or data files to process.

Let’s say that we want to submit the same single core MATLAB job many times, changing only the arguments that are passed to simple.m. We can accomplish this with a job array.

In the example below, the --array option defines the job array by specifying the index numbers you want to use for the individual jobs (in this case, 0 through 9). The $SLURM_ARRAY_TASK_ID bash environment variable then takes on the job array index for each job (here, one integer value from 0 through 9 per job).

In this example, we want to run 10 different jobs (indexed 0 through 9) with different input parameters. We create a file with the input parameters for each job on a different line:

input_arguments.txt

job1_arg1 job1_arg2 job1_arg3

job2_arg1 job2_arg2 job2_arg3

job3_arg1 job3_arg2 job3_arg3

job4_arg1 job4_arg2 job4_arg3

job5_arg1 job5_arg2 job5_arg3

job6_arg1 job6_arg2 job6_arg3

job7_arg1 job7_arg2 job7_arg3

job8_arg1 job8_arg2 job8_arg3

job9_arg1 job9_arg2 job9_arg3

job10_arg1 job10_arg2 job10_arg3
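For more than a handful of jobs, the file can be generated instead of typed. A minimal sketch, assuming the placeholder values above (replace them with your real inputs); if you change n_jobs, keep the --array range in the submission script at 0 through n_jobs-1 so that every line is used.

```shell
#!/usr/bin/env bash
# Generate input_arguments.txt: one line per job, arguments separated by spaces.
# The values are the placeholders used above; substitute your real inputs.
n_jobs=10
for ((job = 1; job <= n_jobs; job++)); do
  printf '%s %s %s\n' "job${job}_arg1" "job${job}_arg2" "job${job}_arg3"
done > input_arguments.txt

# Line 1 is read by array index 0, line 10 by array index 9
head -n 2 input_arguments.txt
```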

Then, in the Slurm submission script, we read the input parameters one line at a time into a bash array called input_arguments and use the value of $SLURM_ARRAY_TASK_ID to select the correct input arguments for each job. Because bash arrays are indexed from 0, the job with index 0 uses the first line of the file and the job with index 9 uses the tenth. Note that the submission script still uses -singleCompThread when starting MATLAB, because each job runs independently on a single core.

example_submit.sh

#!/bin/bash

#SBATCH --account=w10001 ## YOUR SLURM ACCOUNT pXXXX or bXXXX

#SBATCH --partition=w10001 ### PARTITION (buyin, short, normal, w10001, etc)

#SBATCH --array=0-9 ## array indices 0 through 9 (10 jobs, which can run in parallel)

#SBATCH --nodes=1 ## Never need to change this

#SBATCH --ntasks-per-node=1 ## Never need to change this

#SBATCH --time=15:00:00 ## how long does this need to run (remember different partitions have restrictions on this param)

#SBATCH --mem=8G ## how much RAM you need per computer (this affects your FairShare score, so be careful not to ask for more than you need)

#SBATCH --job-name=sample_job ## Slurm does not expand variables in #SBATCH lines; each array task is still identified as <jobid>_<taskid>

#SBATCH --output=sample_job.%A_%a.out ## use the jobid (A) and the specific job index (a) to name your log file

# read each row of the input_arguments.txt file into an array called input_arguments

IFS=$'\n' read -d '' -r -a input_arguments < input_arguments.txt

# Use SLURM_ARRAY_TASK_ID to select this job's row from input_arguments, then split that row on the delimiter you chose (here, a space) into an array called input_args

IFS=' ' read -r -a input_args <<< "${input_arguments[$SLURM_ARRAY_TASK_ID]}"

# Pass the input arguments associated with this SLURM_ARRAY_TASK_ID to your MATLAB script.

## job commands; simple is the MATLAB .m file, specified without the .m extension

module load matlab/r2023b

matlab -singleCompThread -batch "input_arg1='${input_args[0]}';input_arg2='${input_args[1]}';input_arg3='${input_args[2]}';simple"

If input_arguments.txt, example_submit.sh, and simple.m (shown in the section above) are in the same folder, you can submit the job with the command sbatch example_submit.sh.
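Before submitting, it can be worth checking which arguments each array task will receive. The sketch below runs the same two parsing steps outside Slurm, sets the task ID by hand (Slurm sets SLURM_ARRAY_TASK_ID for real array tasks), and prints the MATLAB command instead of running it. The function name print_task_command and the scratch file input_arguments_test.txt are hypothetical stand-ins.

```shell
#!/usr/bin/env bash
# Dry run of the job-array argument parsing: print the MATLAB command each
# array task would run, without needing Slurm or MATLAB.
# Usage: print_task_command TASK_ID ARGUMENT_FILE
print_task_command() {
  local SLURM_ARRAY_TASK_ID="$1" file="$2"
  local -a input_arguments input_args
  # Same two parsing steps as the submission script.
  # read returns non-zero when it reaches end of file, which is expected here.
  IFS=$'\n' read -d '' -r -a input_arguments < "$file" || true
  IFS=' ' read -r -a input_args <<< "${input_arguments[$SLURM_ARRAY_TASK_ID]}"
  printf '%s\n' "matlab -singleCompThread -batch \"input_arg1='${input_args[0]}';input_arg2='${input_args[1]}';input_arg3='${input_args[2]}';simple\""
}

# Small stand-in for input_arguments.txt with three jobs
printf '%s\n' "job1_arg1 job1_arg2 job1_arg3" \
              "job2_arg1 job2_arg2 job2_arg3" \
              "job3_arg1 job3_arg2 job3_arg3" > input_arguments_test.txt

# Slurm would set SLURM_ARRAY_TASK_ID; here we loop over the indices ourselves
for task_id in 0 1 2; do
  print_task_command "$task_id" input_arguments_test.txt
done
```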

Multithreading/Multiple Cores#

MATLAB has built-in multithreading for some linear algebra and numerical functions. By default, MATLAB will try to use all of the cores on a node to perform these computations. However, if a job you’ve submitted to Quest uses more cores than were requested in the Slurm submission script, the job will be terminated. If you want MATLAB to use multiple cores for these calculations, omit the -singleCompThread option when starting MATLAB and add the following line to the beginning of your main .m script:

maxNumCompThreads(str2num(getenv('SLURM_NPROCS')));

Use this line together with the following options in your submission script or sbatch command:

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=<numberofcores>

Example

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=8

This allows MATLAB to spawn 8 threads, matching the 8 cores requested from and allocated by the scheduler.

Note: Setting the maximum number of computational threads using maxNumCompThreads does not propagate to your next MATLAB session; if you are using MATLAB interactively, you must do this every time you start a new MATLAB session.
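The MATLAB line above reads SLURM_NPROCS, which the sbatch documentation describes as an older alias of SLURM_NTASKS kept for backwards compatibility. If you would rather work out the thread count in the submission script, a minimal sketch follows. The helper slurm_thread_count is hypothetical; it falls back to a single thread when neither variable holds a positive integer, for example when the script is tested outside a Slurm job.

```shell
#!/usr/bin/env bash
# Hypothetical helper: how many computational threads to give MATLAB.
# Uses SLURM_NTASKS, then its older alias SLURM_NPROCS, and falls back to 1
# if neither is set or the value is not a positive integer.
slurm_thread_count() {
  local n="${SLURM_NTASKS:-${SLURM_NPROCS:-1}}"
  if [[ "$n" =~ ^[1-9][0-9]*$ ]]; then
    printf '%s\n' "$n"
  else
    printf '1\n'
  fi
}

threads=$(slurm_thread_count)
echo "MATLAB may use ${threads} computational thread(s)"
# The value could then be passed to MATLAB (your_script is a placeholder name):
# matlab -batch "maxNumCompThreads(${threads}); your_script"
```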

Example Evaluation#

Whether using multiple cores will speed up your job depends on the specific MATLAB functions you are using and whether the overhead of splitting the work across multiple cores is worth the gain from running calculations in parallel.

Below, we show two examples:

threads_help.m: a MATLAB program that does benefit from MATLAB’s internal multithreading

threads_do_not_help.m: a MATLAB program that does not benefit from MATLAB’s internal multithreading

Both use the svd function, which can make use of MATLAB’s automatic multithreading. We run each program on a single core (with the -singleCompThread option) and on 8 cores (setting maxNumCompThreads) to compare the total time the jobs take. The Slurm submission scripts are not shown.

threads_help.m

disp('Start Sim')

maxNumCompThreads(str2num(getenv('SLURM_NPROCS')));

t0 = tic;

for i = 5000:5100

z = randn(i);

max(svd(z));

end

t = toc(t0);

X = sprintf('Simulation took %f seconds',t);

disp(X)

Because the matrices passed to svd are large (between 5000x5000 and 5100x5100), we do see a substantial difference (about 5 times faster) when svd can parallelize over 8 cores.

1 CPU: Simulation took 6446.807831 seconds

8 CPUs: Simulation took 1233.587702 seconds

Note that the speedup does not scale one-to-one with the number of cores MATLAB can use. In this case, access to 8 cores gives only about a 5x speedup, not 8x, because there is overhead in distributing the work across the cores and recombining the results.

threads_do_not_help.m

disp('Start Sim')

maxNumCompThreads(str2num(getenv('SLURM_NPROCS')));

t0 = tic;

for i = 500:510

z = randn(i);

max(svd(z));

end

t = toc(t0);

X = sprintf('Simulation took %f seconds',t);

disp(X)

Because the matrices passed to svd are much smaller (between 500x500 and 510x510), we do not see a substantial difference (only about 1.5 times faster) when svd can parallelize over 8 cores.

1 CPU: Simulation took 1.044554 seconds

8 CPUs: Simulation took 0.683330 seconds
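The speedup figures quoted above are the single-core time divided by the 8-core time. Dividing the speedup by the number of cores gives the parallel efficiency, which makes the contrast between the two programs easier to see. A short sketch using the reported timings (awk does the floating-point division, because bash arithmetic is integer-only):

```shell
#!/usr/bin/env bash
# speedup = T(1 core) / T(N cores); efficiency = speedup / N
# Usage: speedup_report T1 TN N
speedup_report() {
  local t1="$1" tn="$2" cores="$3"
  awk -v t1="$t1" -v tn="$tn" -v n="$cores" \
    'BEGIN { s = t1 / tn; printf "speedup=%.2f efficiency=%.2f\n", s, s / n }'
}

speedup_report 6446.807831 1233.587702 8   # threads_help.m
speedup_report 1.044554 0.683330 8         # threads_do_not_help.m
```

With these timings, threads_help.m runs at roughly 65% parallel efficiency on 8 cores, while threads_do_not_help.m runs at roughly 19%.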

A final note: if you run the same program in a single core configuration on Quest and on your laptop, it will very likely take longer on Quest, because your laptop uses MATLAB’s automatic multithreading by default. That said, enabling multithreading on Quest means requesting more cores, and MATLAB may or may not use them efficiently depending on the exact functions you call and the size of the data or matrices being operated on, as the examples above show.

Multinode Jobs#

To run jobs on Quest that use cores across multiple nodes, you need to create a cluster profile in MATLAB. The initial steps in this section set up a MATLAB cluster configuration for Quest, which maps MATLAB job arguments to Quest parameters such as partition, account, nodes, and cores. This configuration allows MATLAB to submit batch jobs to the Slurm job scheduler on Quest, so once it is in place you can easily submit multi-node workflows. The following sections describe the initial configuration.

Quest Cluster Profile Configuration#

Log in to Quest with X-forwarding enabled (use the -X option when connecting via ssh or connect with FastX to have X-forwarding enabled by default). After connecting to Quest, launch MATLAB from the login node from your home directory:

$ module load matlab/r2023b

$ matlab

We will configure MATLAB to run parallel jobs on Quest by calling configCluster. configCluster only needs to be called once per version of MATLAB to create a configuration file for that version, which is saved in your home directory. Please be aware that running configCluster more than once per version will reset your cluster profile back to default settings and erase any saved modifications to the profile.

Within MATLAB run:

>> rehash toolboxcache

>> configCluster

Jobs will now default to the cluster rather than submit to the local machine.

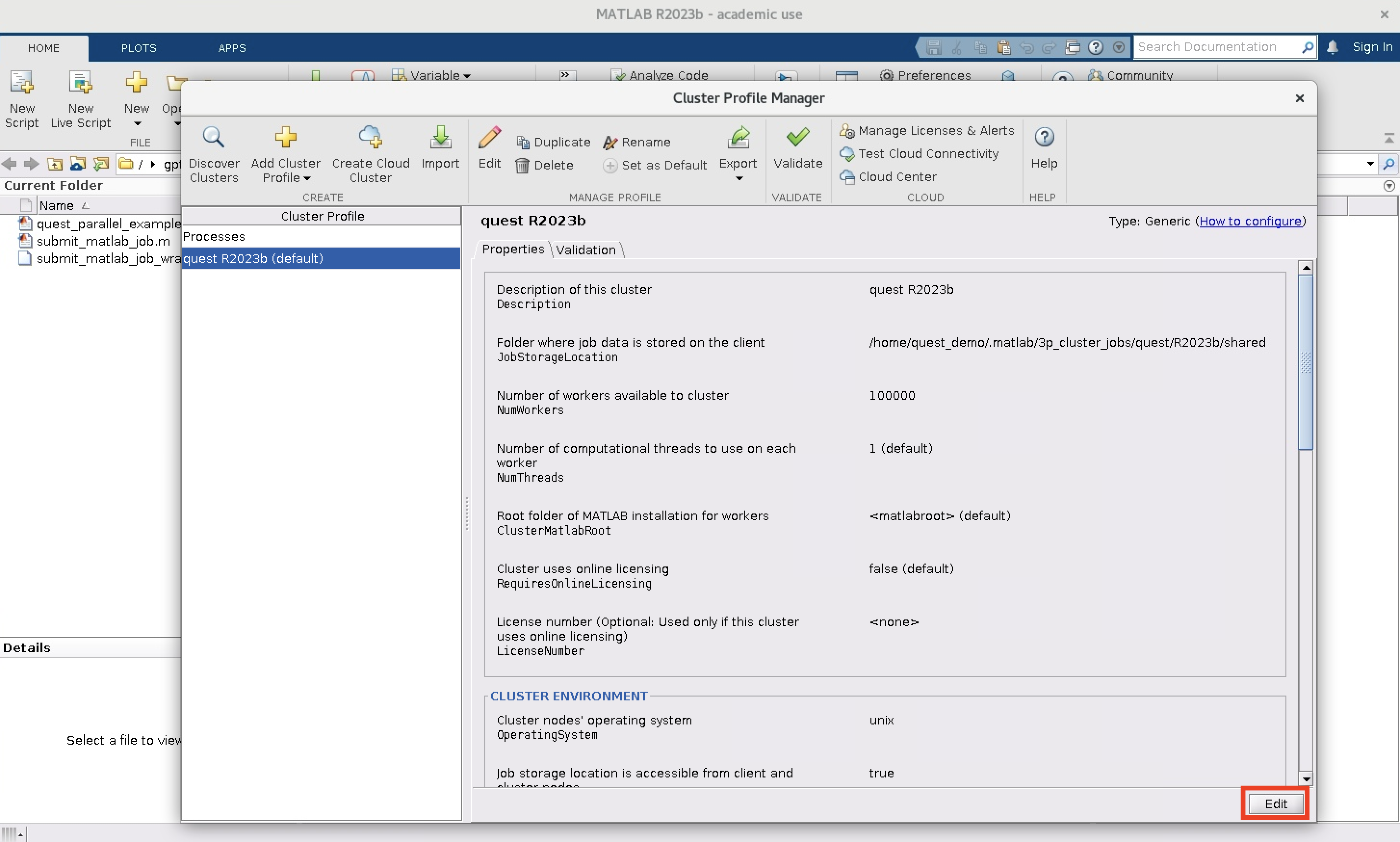

Although the Cluster Profile created by configCluster will work without modification, we recommend making a few edits.

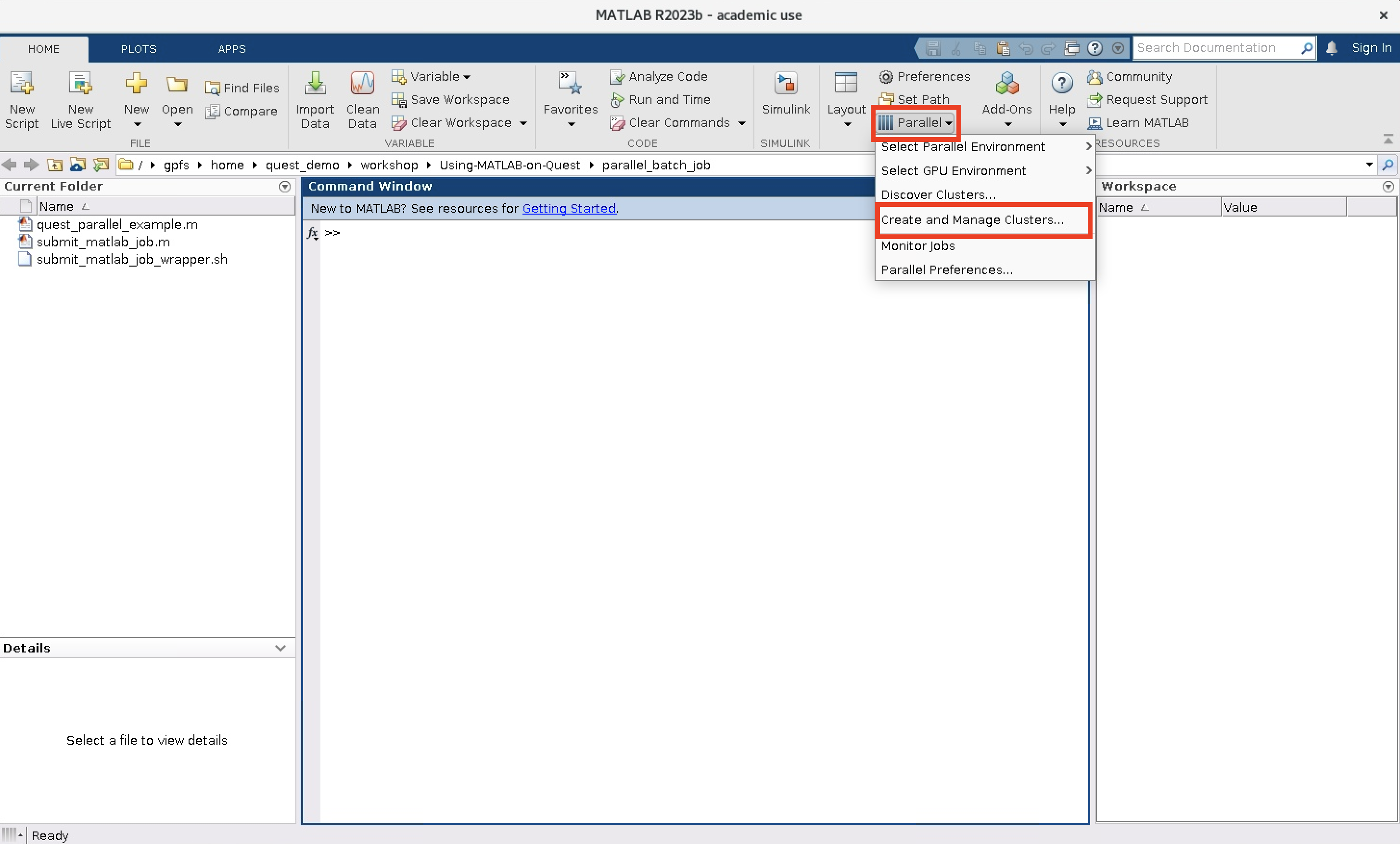

If you are using the MATLAB GUI, you should be able to see and modify the new cluster profile by opening it from the menus: Parallel > Create and Manage Clusters.

You should see that the default Cluster Profile is now quest R20XXa/b depending on what version of MATLAB you are using.

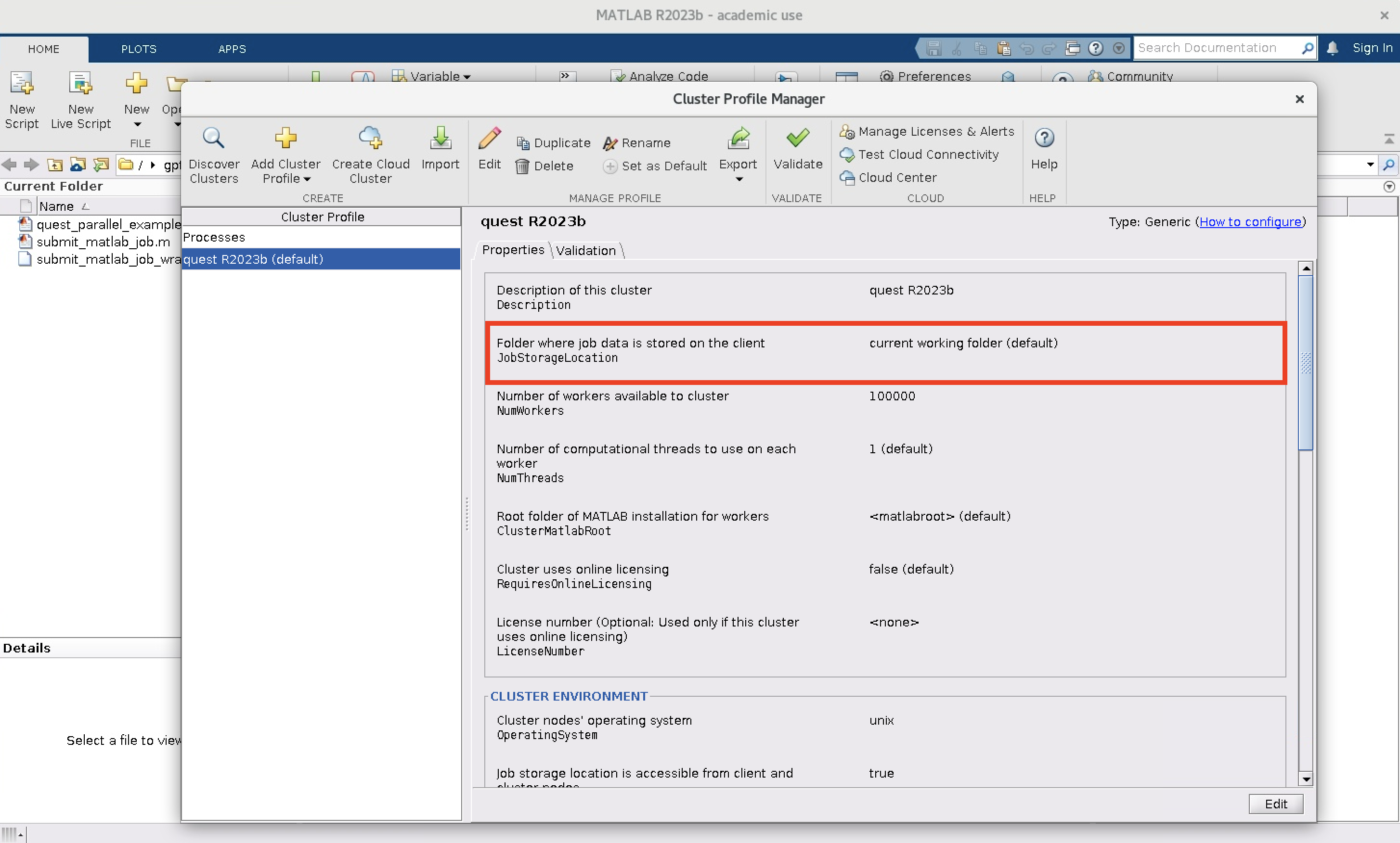

Click Edit, change the JobStorageLocation entry, and then click Done. We recommend setting JobStorageLocation to its default value, the current working directory, which you can do by leaving the box blank.

Configuring Cluster Jobs#

Prior to submitting the job, we can specify various parameters to pass to our jobs, such as partition, Slurm account, walltime, etc.

>> % Get a handle to the cluster

>> c = parcluster;

The following options are required in order to submit a MATLAB job to the cluster. Here they are filled in with example values that you should change as appropriate for your job.

>> % Specify the walltime (e.g. 4 hours)

>> c.AdditionalProperties.WallTime = '04:00:00';

>> % Specify an account to use for MATLAB jobs (e.g. pXXXX, bXXXX, etc)

>> c.AdditionalProperties.AccountName = 'p12345';

>> % Specify a partition to use for MATLAB jobs (e.g. short, normal, long)

>> c.AdditionalProperties.QueueName = 'short';

The following arguments are optional but worth considering when running MATLAB jobs on the cluster:

>> % Specify memory to use for MATLAB jobs, per core (default: 4gb)

>> c.AdditionalProperties.MemUsage = '6gb';

>> % Specify number of nodes to use

>> c.AdditionalProperties.Nodes = 1;

>> % Require exclusive node

>> c.AdditionalProperties.RequireExclusiveNode = false;

>> % Specify e-mail address to receive notifications about your job

>> c.AdditionalProperties.EmailAddress = 'user-id@northwestern.edu';

The following arguments apply when running GPU-accelerated MATLAB jobs:

>> % Specify number of GPUs

>> c.AdditionalProperties.GpusPerNode = 1;

>> % Specify type of GPU card to use (e.g. a100)

>> c.AdditionalProperties.GpuCard = '';

Save the profile after modifying AdditionalProperties so that the changes persist between MATLAB sessions.

>> c.saveProfile

To see the values of the current configuration options, display AdditionalProperties.

>> % To view current properties

>> c.AdditionalProperties

Unset a value when no longer needed.

>> % Turn off email notifications

>> c.AdditionalProperties.EmailAddress = '';

>> c.saveProfile

Submitting Cluster Jobs#

Users can submit parallel workflows with the batch command either with or without the MATLAB GUI.

MATLAB GUI#

Let’s use the following example of a parallel job, saved as quest_parallel_example.m. It shows how you can submit a multi-core MATLAB job on Quest, using parfor to split a MATLAB task across multiple CPU cores.

quest_parallel_example.m

disp('Start Sim')

iter = 100000;

t0 = tic;

parfor idx = 1:iter

A(idx) = idx;

end

t = toc(t0);

X = sprintf('Simulation took %f seconds',t);

disp(X)

save RESULTS A t

To run this script with the cluster profile we set up above, we will make a MATLAB script called submit_matlab_job.m that is similar to a Slurm submission script. Save submit_matlab_job.m in the same directory as quest_parallel_example.m.

submit_matlab_job.m

% Get a handle to the cluster

c = parcluster;

%% Required arguments in order to submit MATLAB job

% Specify the walltime (e.g. 4 hours)

c.AdditionalProperties.WallTime = '01:00:00';

% Specify an account to use for MATLAB jobs (e.g. pXXXX, bXXXX, etc)

c.AdditionalProperties.AccountName = 'w10001';

% Specify a queue/partition to use for MATLAB jobs (e.g. short, normal, long)

c.AdditionalProperties.QueueName = 'w10001';

%% optional arguments but worth considering

% Specify memory to use for MATLAB jobs, per core (default: 4gb)

c.AdditionalProperties.MemUsage = '5gb';

% Specify number of nodes to use

c.AdditionalProperties.Nodes = 1;

% Specify e-mail address to receive notifications about your job

c.AdditionalProperties.EmailAddress = 'quest_demo@northwestern.edu';

% The script that you want to run through Slurm needs to be in the MATLAB PATH

% Here we assume that quest_parallel_example.m lives in the same folder as submit_matlab_job.m

addpath(pwd)

% Finally, submit the MATLAB script quest_parallel_example to Slurm so that MATLAB

% requests enough resources to run a parallel pool of size 16 (i.e. parallelize over 16 CPUs).

job = c.batch('quest_parallel_example', 'Pool', 16, 'CurrentFolder', '.');

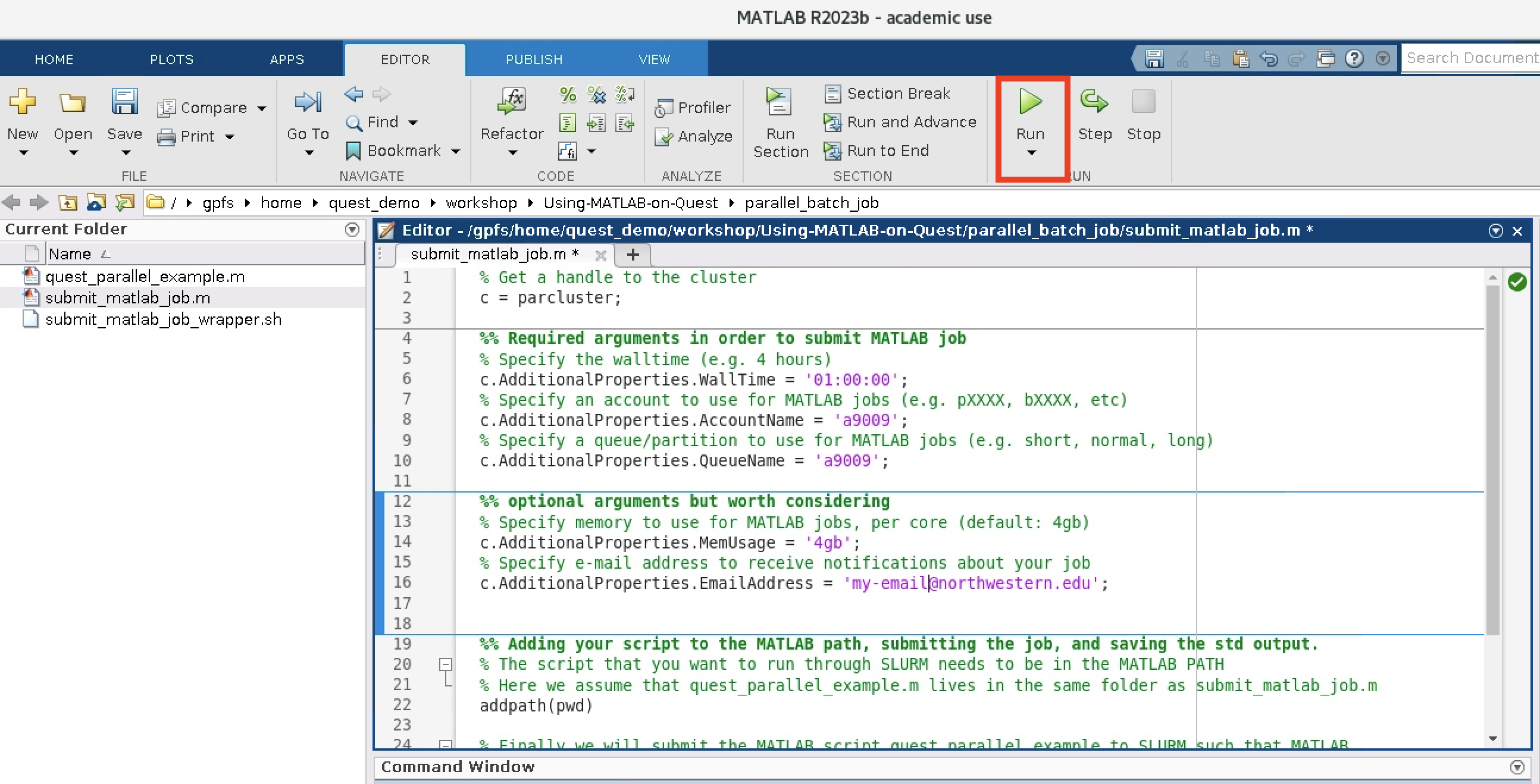

Run submit_matlab_job.m using the Run button in the MATLAB GUI.

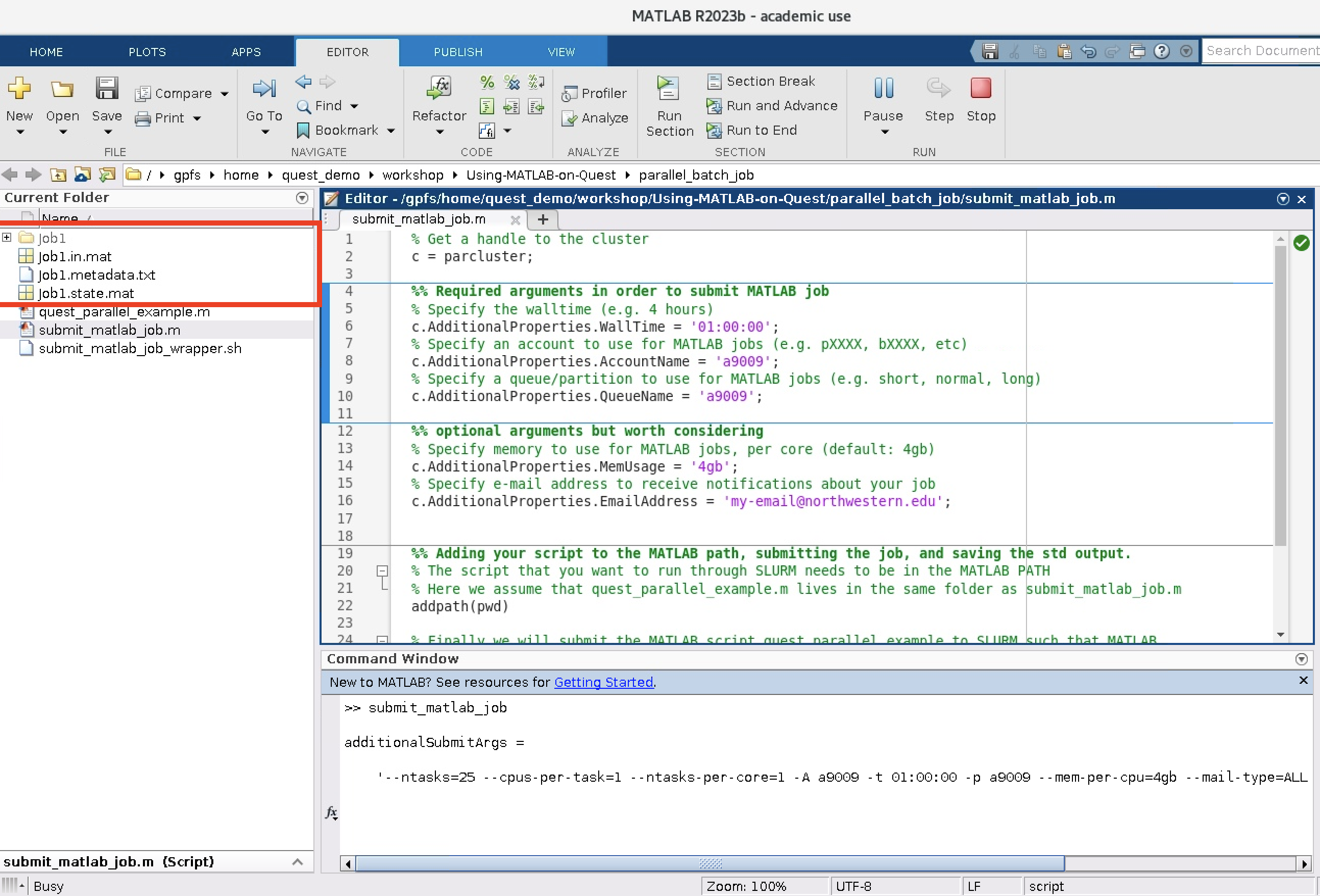

MATLAB should print the arguments that it passes to the sbatch command used to submit the job to Slurm; these are based on the configuration settings in submit_matlab_job.m. Note that the number of tasks requested is the size of the parallel pool plus one. The extra core is for the root or main MATLAB worker. You should also see a folder called JobX appear in your current working directory, as shown in the screenshot below. This is the folder where the MATLAB job runs and where the output of any print statements in your code appears.

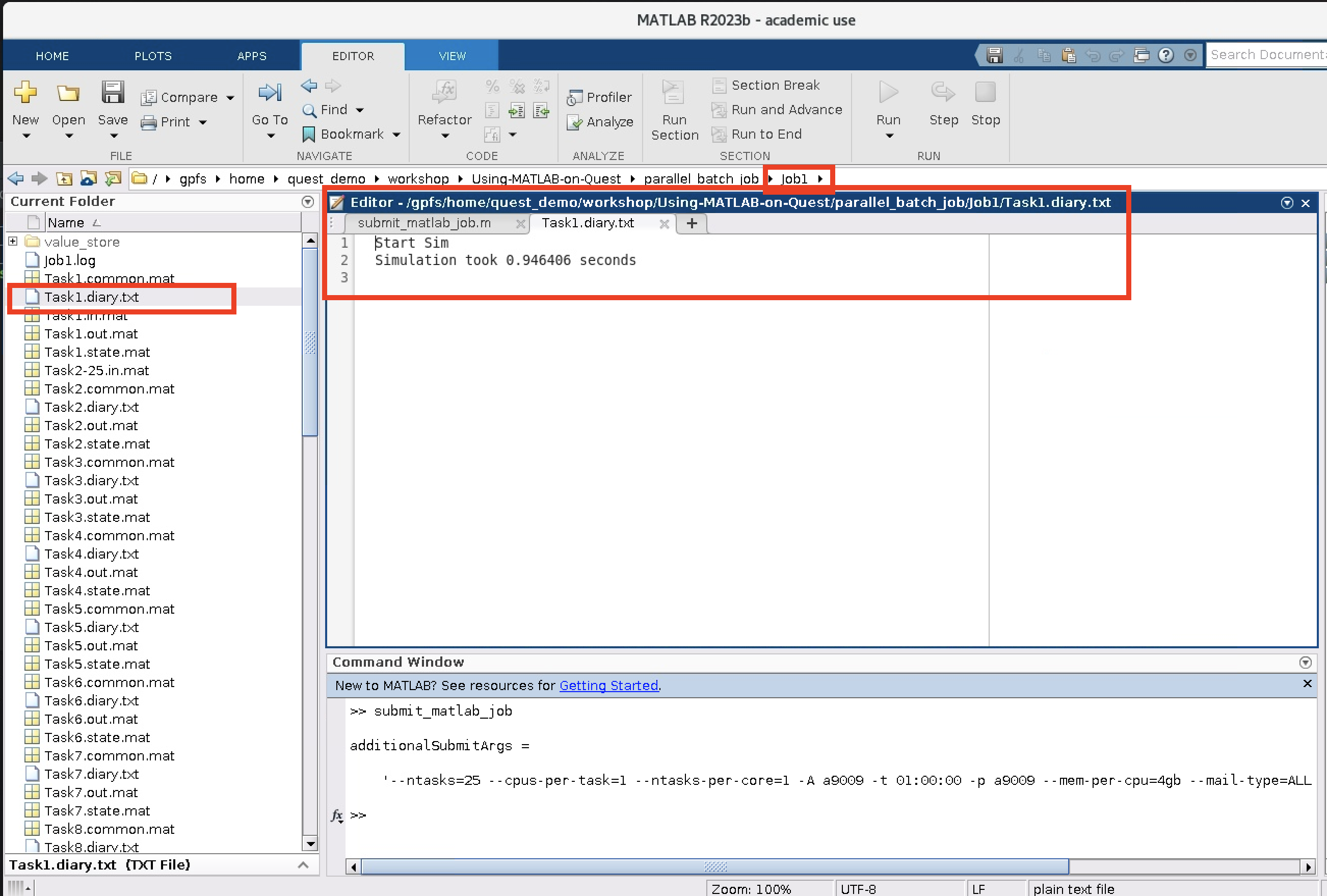

In the Job1 folder, there is a set of files for every MATLAB task and/or worker. The most important file is Task1.diary.txt, which is where the output of any print or display statements in your script goes. Here is an example:

Monitor a Cluster Job#

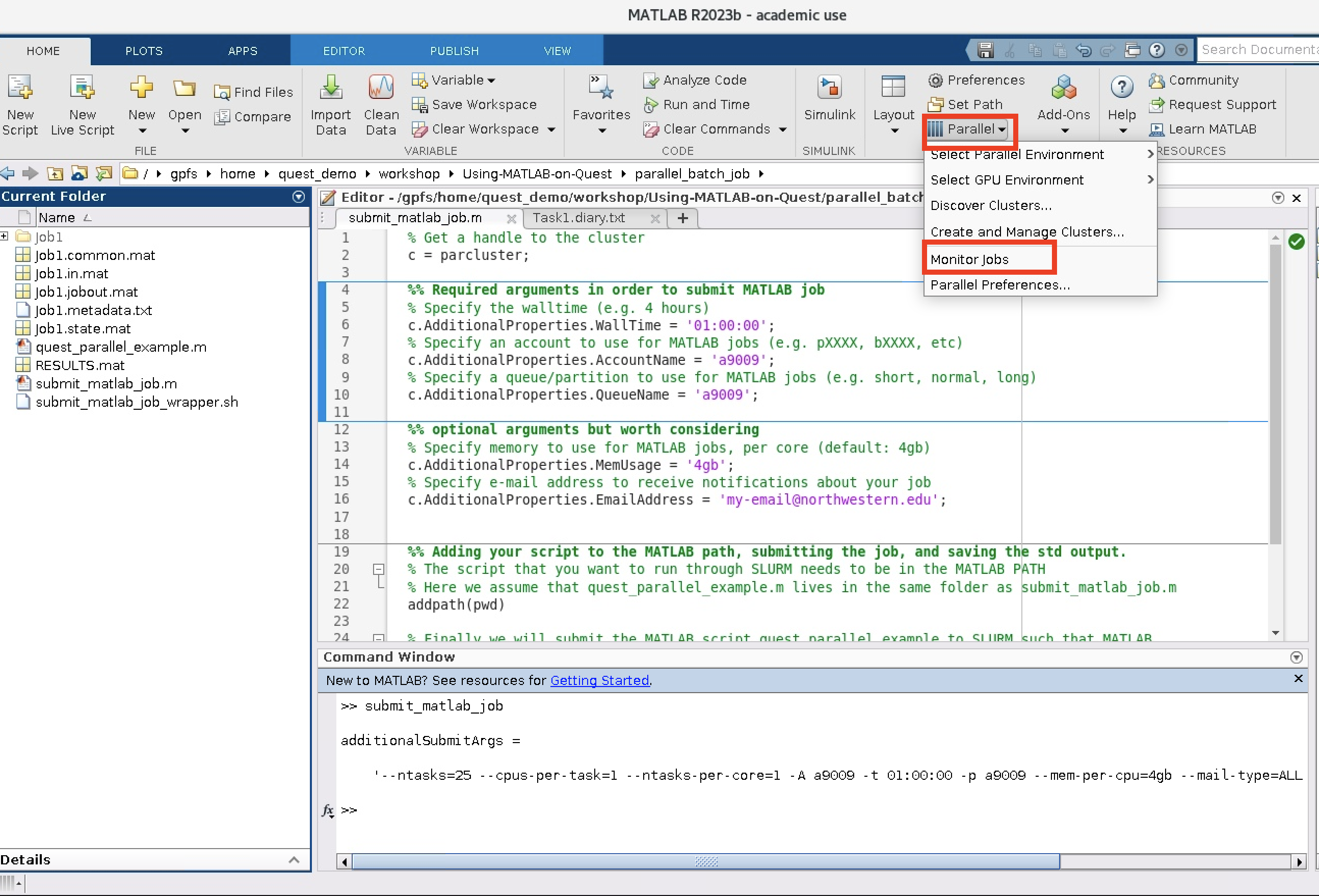

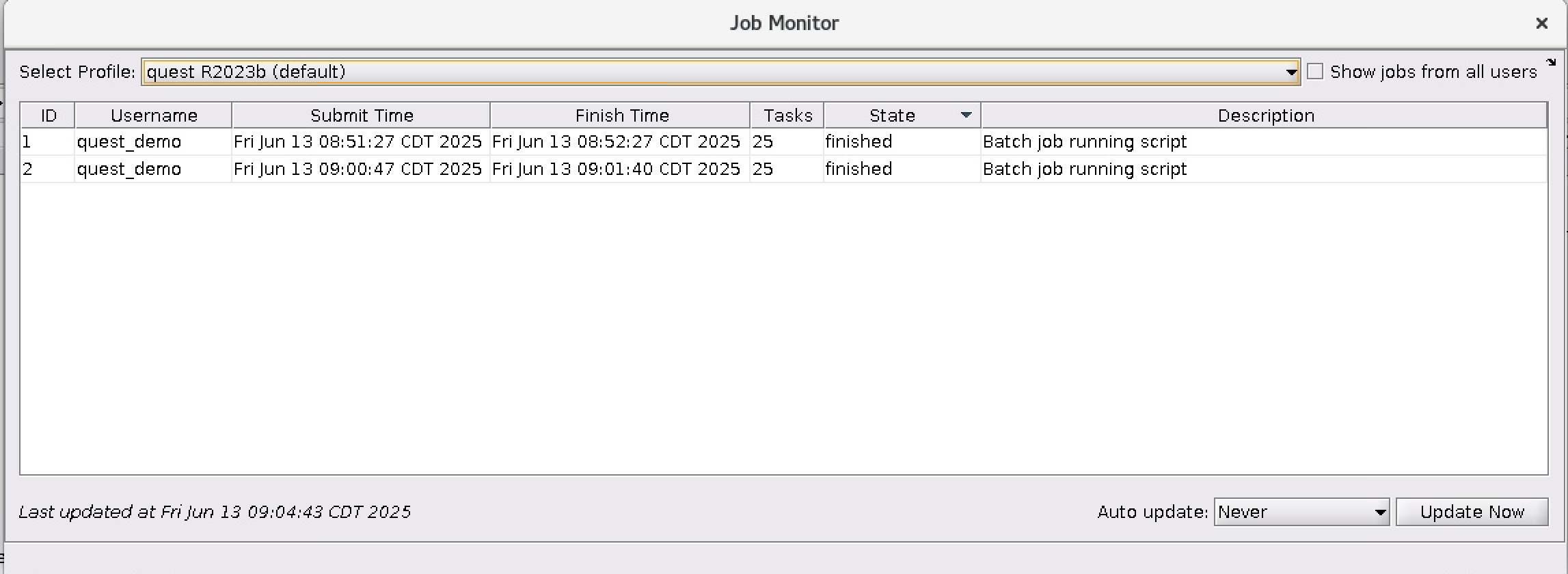

MATLAB provides an application called the Job Monitor to check the status of your MATLAB jobs. To launch Job Monitor from the MATLAB GUI, go to Parallel > Job Monitor.

This will launch a new window containing details related to all parcluster jobs.

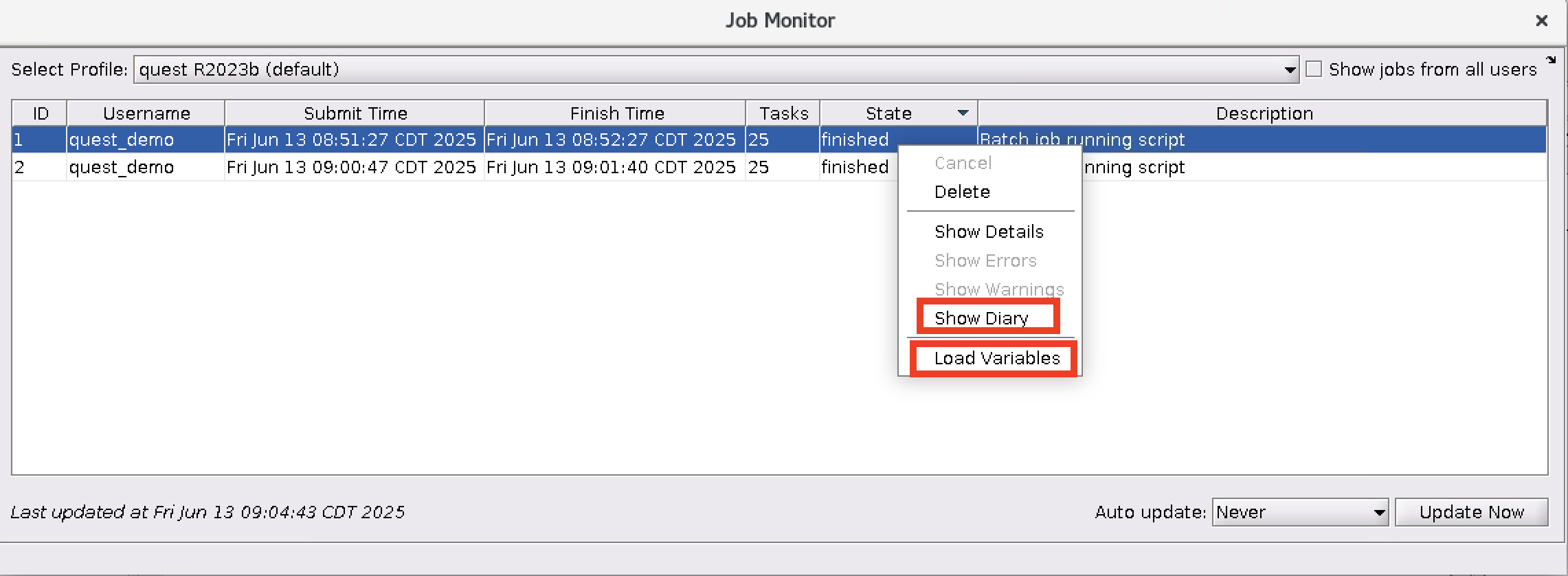

Use the Job Monitor to:

Check on the state of your MATLAB job (pending, running, finished)

Display any MATLAB error messages via Show Errors

View the standard output from the job (from print statements like disp, fprintf) via Show Diary

Load all of the variables defined in your program to your current workspace via Load Variables

Command Line#

If you do not plan to use the MATLAB GUI to run submit_matlab_job.m, create a bash script that loads MATLAB and runs it in command-line-only mode.

Create a file called submit_matlab_job_wrapper.sh that contains these two lines:

submit_matlab_job_wrapper.sh

module load matlab/r2023b

matlab -singleCompThread -batch submit_matlab_job

All that is left to do is to submit the job by running

$ bash submit_matlab_job_wrapper.sh

on the command line. This takes a little while to run, but you will know that MATLAB has submitted a job to Slurm when it prints the arguments it passed to sbatch, based on the configuration settings in submit_matlab_job.m. For example, the MATLAB submission script above produces this output:

$ bash submit_matlab_job_wrapper.sh

additionalSubmitArgs =

'--ntasks=17 --cpus-per-task=1 --ntasks-per-core=1 -A w10001 -t 01:00:00 -p w10001 -N 1 --mem-per-cpu=5gb --mail-type=ALL --mail-user=quest_demo@northwestern.edu'

Note that the number of tasks requested (--ntasks=17) is the size of the parallel pool (16) plus one. The extra core is for the root or main MATLAB worker.
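The link between the settings in submit_matlab_job.m and these arguments can be reproduced in a few lines, which may help when checking a configuration before submitting. This is an illustration only, assuming the argument pattern shown in the output above; the function build_submit_args is hypothetical, and the real arguments are generated by MATLAB's Quest integration scripts.

```shell
#!/usr/bin/env bash
# Hypothetical illustration only: rebuild the sbatch arguments printed above
# from the values in submit_matlab_job.m. MATLAB's own integration scripts
# generate the real arguments.
# Usage: build_submit_args POOL ACCOUNT WALLTIME PARTITION NODES MEM_PER_CPU EMAIL
build_submit_args() {
  local pool="$1" account="$2" walltime="$3" partition="$4"
  local nodes="$5" mem="$6" email="$7"
  # One task per pool worker, plus one for the main MATLAB worker
  local ntasks=$((pool + 1))
  printf '%s\n' "--ntasks=${ntasks} --cpus-per-task=1 --ntasks-per-core=1 -A ${account} -t ${walltime} -p ${partition} -N ${nodes} --mem-per-cpu=${mem} --mail-type=ALL --mail-user=${email}"
}

# Values from submit_matlab_job.m ('Pool', 16 plus the AdditionalProperties)
build_submit_args 16 w10001 01:00:00 w10001 1 5gb quest_demo@northwestern.edu
```

Because MemUsage is a per-core setting (--mem-per-cpu), this example requests 17 x 5 GB = 85 GB of memory in total.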

GPU Jobs#

Users can submit GPU workflows with the batch command either with or without the MATLAB GUI. Let’s use the following example for a GPU job. The script provides an example of using the eigenvalue (eig) function on a simple array.

quest_gpu_example.m

display(gpuDevice)

A = gpuArray([1 0 1; -1 -2 0; 0 1 -1]);

e = eig(A);

In order to submit the above MATLAB job without the GUI, we will create two additional scripts, one bash script and one MATLAB script.

First, we will make a MATLAB script called submit_matlab_job.m which will look a lot like a GPU Slurm submission script.

submit_matlab_job.m

% Get a handle to the cluster

c = parcluster;

%% Required arguments in order to submit a MATLAB GPU job

% Specify the walltime (e.g. 4 hours)

c.AdditionalProperties.WallTime = '01:00:00';

% Specify an account to use for MATLAB jobs (e.g. pXXXX, bXXXX, etc)

c.AdditionalProperties.AccountName = 'w10001';

% Specify a queue/partition to use for MATLAB jobs (e.g. short, normal, long)

c.AdditionalProperties.QueueName = 'w10001';

% Specify number of GPUs

c.AdditionalProperties.GpusPerNode = 1;

% Specify type of GPU card to use (e.g. a100)

c.AdditionalProperties.GpuCard = 'a100';

%% optional arguments but worth considering

% Specify memory to use for MATLAB jobs, per core (default: 4gb)

c.AdditionalProperties.MemUsage = '5gb';

% Specify number of nodes to use

c.AdditionalProperties.Nodes = 1;

% Specify e-mail address to receive notifications about your job

c.AdditionalProperties.EmailAddress = 'quest_demo@northwestern.edu';

% The script that you want to run through Slurm needs to be in the MATLAB PATH

% Here we assume that quest_gpu_example.m lives in the same folder as submit_matlab_job.m

addpath(pwd)

% Finally, submit the MATLAB script quest_gpu_example to Slurm

job = c.batch('quest_gpu_example', 'CurrentFolder', '.');

After writing this MATLAB script, which plays the role of a Slurm submission script, create a bash script that simply runs it; the MATLAB script then submits the job to Slurm.

Create a file called submit_matlab_job_wrapper.sh that contains these two lines:

submit_matlab_job_wrapper.sh

module load matlab/r2023b

matlab -singleCompThread -batch submit_matlab_job

All that is left to do is to submit the job by running

$ bash submit_matlab_job_wrapper.sh

on the command line. This takes a little while to run, but you will know that MATLAB has submitted a job to Slurm when it prints the arguments it passed to sbatch, based on the configuration settings in submit_matlab_job.m. For example, the MATLAB submission script above produces this output:

$ bash submit_matlab_job_wrapper.sh

additionalSubmitArgs =

'--ntasks=1 --cpus-per-task=1 --ntasks-per-core=1 -A w10001 -t 01:00:00 -p w10001 -N 1 --gres=gpu:a100:1 --mem-per-cpu=5gb --mail-type=ALL --mail-user=quest_demo@northwestern.edu'

Note the option --gres=gpu:a100:1, which confirms that you have correctly requested a GPU resource (one A100) for this job.
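If you capture MATLAB's output in a log, the GPU request can also be checked from a script. A minimal sketch; the helper gpu_request is hypothetical, and it only understands the two --gres forms used on this page (gpu:TYPE:COUNT and gpu:COUNT).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the GPU type and count requested in a string of
# sbatch arguments, or "none" if there is no --gres=gpu option.
gpu_request() {
  local args="$1" gres spec type count
  gres=$(grep -o -- '--gres=gpu[^ ]*' <<< "$args") || { echo none; return; }
  spec=${gres#--gres=gpu}   # e.g. ":a100:1" or ":1"
  spec=${spec#:}            # e.g. "a100:1" or "1"
  if [[ "$spec" == *:* ]]; then
    type=${spec%%:*}
    count=${spec##*:}
  else
    type=any                # no type given: any GPU type
    count=$spec
  fi
  printf 'type=%s count=%s\n' "$type" "$count"
}

gpu_request '--ntasks=1 --cpus-per-task=1 --ntasks-per-core=1 -A w10001 -t 01:00:00 -p w10001 -N 1 --gres=gpu:a100:1 --mem-per-cpu=5gb'
```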

GPU-Accelerated MATLAB#

To use both the A100 and H100 GPU cards, please use MATLAB R2023a or later. MATLAB R2021a through R2022b will work on the A100 cards only, and MATLAB versions older than R2021a will not work on the Quest GPUs. Please see MATLAB GPU Computing for more information on how to use GPUs with MATLAB.

The following short Slurm submission script, which runs a single MATLAB command, can be used to verify that MATLAB can see and use the GPU devices on Quest.

#!/bin/bash

#SBATCH --account=<account> ## Required: your Slurm account name, i.e. eXXXX, pXXXX or bXXXX

#SBATCH --partition=<partition> ## Required: buyin, short, normal, long, gengpu, genhimem, etc.

#SBATCH --time=<HH:MM:SS> ## Required: How long will the job need to run? Limits vary by partition

#SBATCH --gres=gpu:1 ## Required: number of GPUs to request

#SBATCH --nodes=<#> ## How many computers/nodes do you need? Usually 1

#SBATCH --ntasks=<#> ## How many CPUs or processors do you need? (default value 1)

#SBATCH --mem=<#G> ## How much RAM do you need per computer/node? G = gigabytes

#SBATCH --job-name=<name> ## Used to identify the job

# clear your environment

module purge

# load any modules needed

module load matlab/r2023b

# List the available GPU devices

matlab -batch 'gpuDeviceTable'
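A lighter-weight check that does not start MATLAB is to inspect CUDA_VISIBLE_DEVICES. Slurm commonly sets this variable to the GPUs assigned to a job that requested them with --gres, but whether it does depends on the cluster's Slurm configuration, so treat that as an assumption. The sketch below (helper name visible_gpu_count is hypothetical) could be added to the script above before the matlab line.

```shell
#!/usr/bin/env bash
# Assumes Slurm exports CUDA_VISIBLE_DEVICES (e.g. "0" or "0,1") for --gres GPU jobs.
# Count the GPUs listed in that comma-separated variable; print 0 if unset or empty.
visible_gpu_count() {
  local devices="${CUDA_VISIBLE_DEVICES:-}"
  if [[ -z "$devices" ]]; then
    echo 0
    return
  fi
  local -a ids
  IFS=',' read -r -a ids <<< "$devices"
  echo "${#ids[@]}"
}

echo "GPUs visible to this job: $(visible_gpu_count)"
```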

Interactive Multinode parpool#

To run an interactive pool job on the cluster that can use cores across multiple nodes, continue to use parpool as you’ve done before.

>> % Get a handle to the cluster

>> c = parcluster;

>> % Open a pool of 12 workers on the cluster

>> pool = c.parpool(12);

This will submit a job to Slurm to secure the cores for the pool.

Once the MATLAB Command Window returns a message that the pool is ready, proceed with your work in MATLAB.

Once you’re done with the pool, delete it.

>> % Delete the pool

>> pool.delete

Debugging Cluster Jobs#

If a serial job produces an error, call the getDebugLog method to view the error log file. When submitting independent jobs with multiple tasks, specify the task number.

>> c.getDebugLog(job.Tasks(3))

For Pool jobs, only specify the job object.

>> c.getDebugLog(job)

When troubleshooting a job, the cluster admin may request the scheduler ID of the job. You can get it by calling schedID:

>> schedID(job)

ans = 25539

Learn More#

To learn more about the MATLAB Parallel Computing Toolbox, check out these resources: