SDE Projects

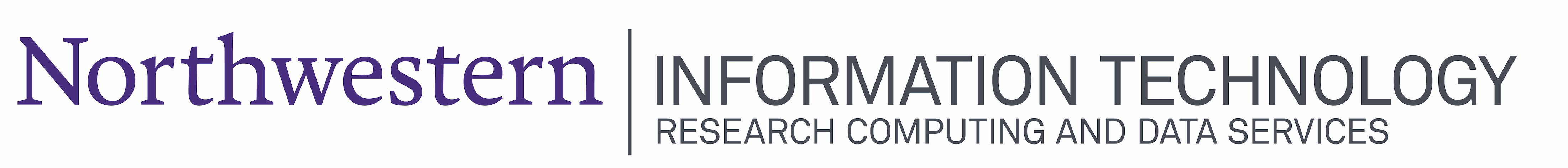

Each SDE environment contains five Google Cloud projects. Each project serves a specific purpose and has the necessary resources configured within it. Your role within the SDE (Data Engineer or Researcher/Data Analyst) determines what you are permitted to do within each project.

SDE Organization

The five projects each support a specific set of operations and tasks:

Data Ingress: Importing data into the SDE environment.

Data Lake: Storing raw, curated, and analysis data.

Data Ops: Data cleaning, curation, and analysis work by Data Engineers.

Workspace Project: Data analysis work by Researchers/Data Analysts.

Data Egress: Exporting data from the SDE environment.
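The role-based access described in the project sections of this page can be summarized as a small lookup table. The Python sketch below is illustrative only: the project keys, role labels, and the `can_access` and `projects_for` helpers are hypothetical names chosen for this example and are not part of any SDE tooling. It records only whether a role has any access to a project, not the level of that access.

```python
# Illustrative summary of which role can reach which project.
# Keys and role labels are hypothetical names chosen for this sketch.
PROJECT_ACCESS = {
    "data_ingress": {"data_engineer"},
    "data_lake": {"data_engineer", "researcher"},
    "data_ops": {"data_engineer"},
    "workspace": {"researcher"},
    "data_egress": {"data_engineer", "researcher"},
}


def can_access(role: str, project: str) -> bool:
    """Return True if the role has any access to the project."""
    return role in PROJECT_ACCESS.get(project, set())


def projects_for(role: str) -> list[str]:
    """Return the sorted list of projects the role can reach."""
    return sorted(p for p, roles in PROJECT_ACCESS.items() if role in roles)


if __name__ == "__main__":
    for role in ("data_engineer", "researcher"):
        print(role, "->", ", ".join(projects_for(role)))
```

Actual permissions inside each project (for example, view versus manage rights on a bucket) are set during SDE environment setup and are more granular than this table suggests.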

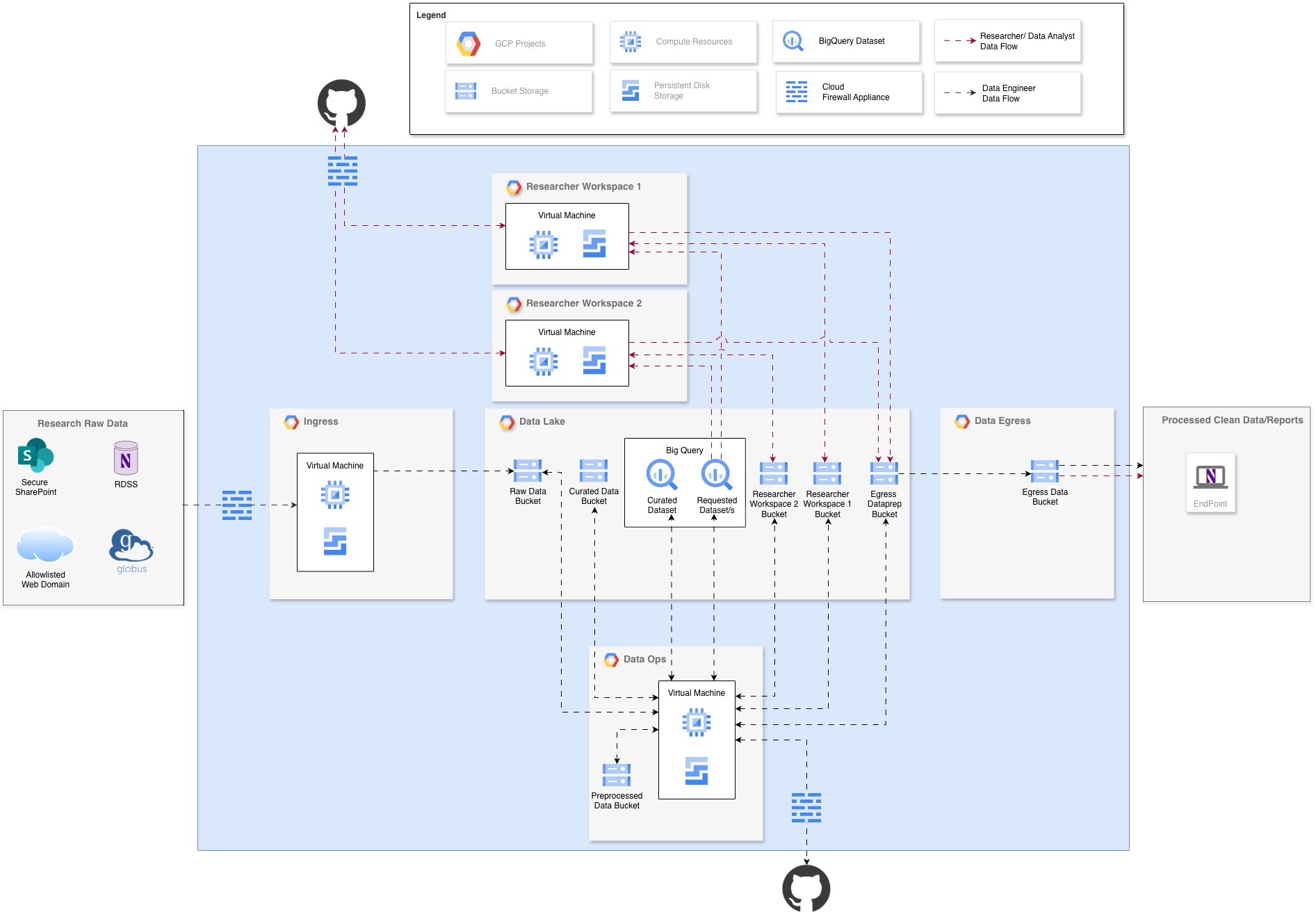

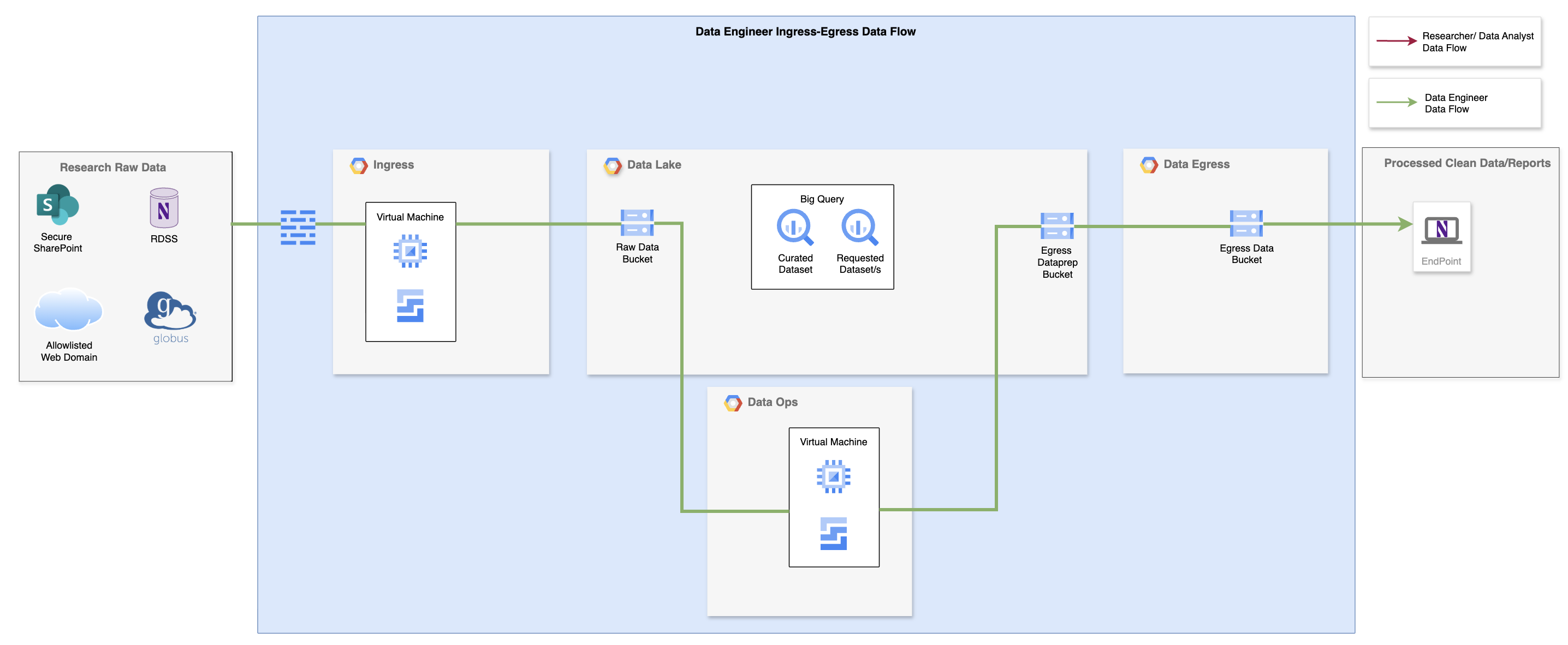

Data Ingress Project

The Ingress Project serves as the controlled gateway for bringing external data into the platform. Its primary purpose is to provide Data Engineers with a secure environment where external files can be accessed, transferred, and ingested into the SDE environment. The project ensures that all requests to connect with outside resources go through the proper approval channels, maintaining compliance with institutional policies. Once data is brought in, it is scanned and monitored to safeguard against security risks, and its movement into the Data Lake Project is tracked for auditing. In this way, the Ingress Project balances accessibility with strong oversight, enabling external data to be introduced safely into the research ecosystem.

Data Engineer

Access and Resources:

Access to a Virtual Machine (VM) to connect to external data repositories on the allowlist if needed. Data transferred from an approved website can be temporarily stored on the storage attached to the VM.

A storage bucket to support Globus transfers will be added if Globus is requested for the SDE environment.

Project Operations:

Access external data sources and securely transfer files to the virtual environment.

Perform security scans to ensure files are free from malicious code.

Review and verify the data for accuracy and completeness.

Log all ingestion activities for tracking and auditing purposes.

Move data to the “Raw Data” bucket in the Data Lake Project, either automatically or manually.

Remove temporary files from the virtual environment to maintain storage efficiency.

See Data Ingress Procedures for more details on data ingress processes and requirements.
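The verification and logging steps listed above could be supported by a small script run on the Ingress VM. The following Python sketch is one possible approach under stated assumptions, not the SDE's actual procedure: it assumes the data provider publishes a SHA-256 checksum for each file, and the function names, record fields, and JSON Lines log format are all hypothetical. It does not replace the security scan or the manual review of the data.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large transfers do not exhaust memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def ingestion_record(path: Path, source: str, expected_sha256: str) -> dict:
    """Build an audit record and flag whether the transfer arrived intact."""
    actual = sha256_of(path)
    return {
        "file": path.name,
        "source": source,
        "size_bytes": path.stat().st_size,
        "sha256": actual,
        "checksum_ok": actual == expected_sha256.lower(),
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }


def append_to_log(record: dict, log_path: Path) -> None:
    """Append one JSON object per line to a local ingestion log."""
    with log_path.open("a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
```

A file whose record shows `checksum_ok` as false would typically be transferred again rather than moved on to the “Raw Data” bucket.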

Researcher/Data Analyst

Researcher/Data Analyst users have no access to the Ingress Project. They can access data that has been imported into the SDE environment once a Data Engineer has reviewed it and added it to the Data Lake Project.

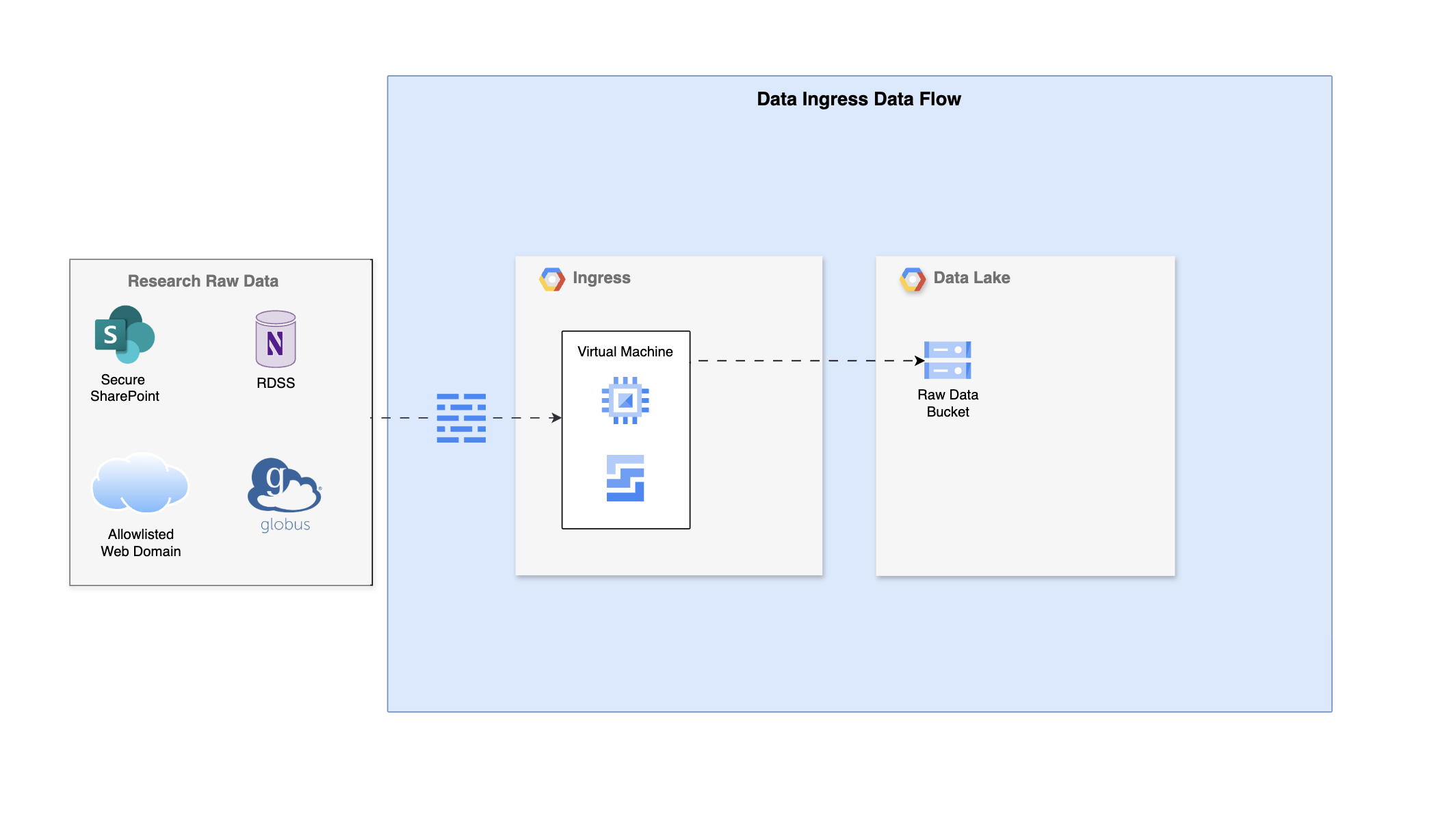

Data Lake Project

The Data Lake Project is the central location where all data is stored and through which all data flows. It comes with preconfigured storage buckets, with access to each configured during SDE environment setup. Data Engineers manage these buckets and can create new ones as needed. Researcher/Data Analyst users can use the data inside the storage buckets for their analysis work. BigQuery is also available as a data storage option in the Data Lake Project.

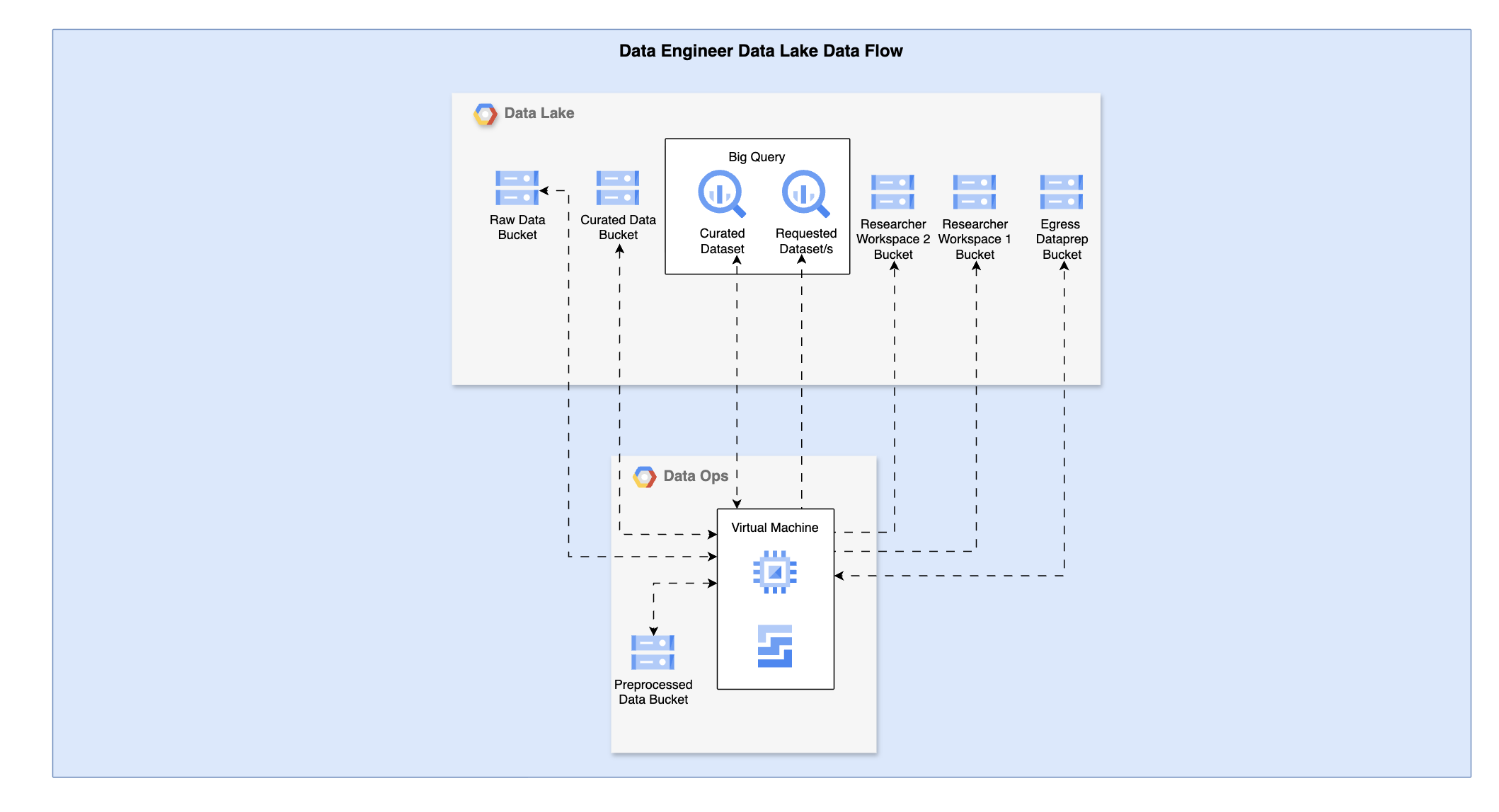

Data Engineer

Access and Resources:

Can manage all storage buckets in the project, including creating, deleting, and setting permissions. Default buckets include:

Raw Data bucket: For storing original, unprocessed files.

Curated Data bucket: For storing cleaned and processed versions of raw data files.

Researcher Workspace bucket: For storing data files that can be accessed by Researcher/Data Analyst users from VMs in the researcher Workspace Projects.

Egress Dataprep bucket: For Researchers/Data Analysts to stage data for egress from the SDE environment.

Can create and modify BigQuery datasets.

Project Operations:

Manages the movement of data between storage buckets and BigQuery.

Prepares and organizes processed data into BigQuery tables.

Shares access to curated datasets so Researchers/Data Analysts can perform analysis.

Provides curated data to Workspace Project buckets when needed.

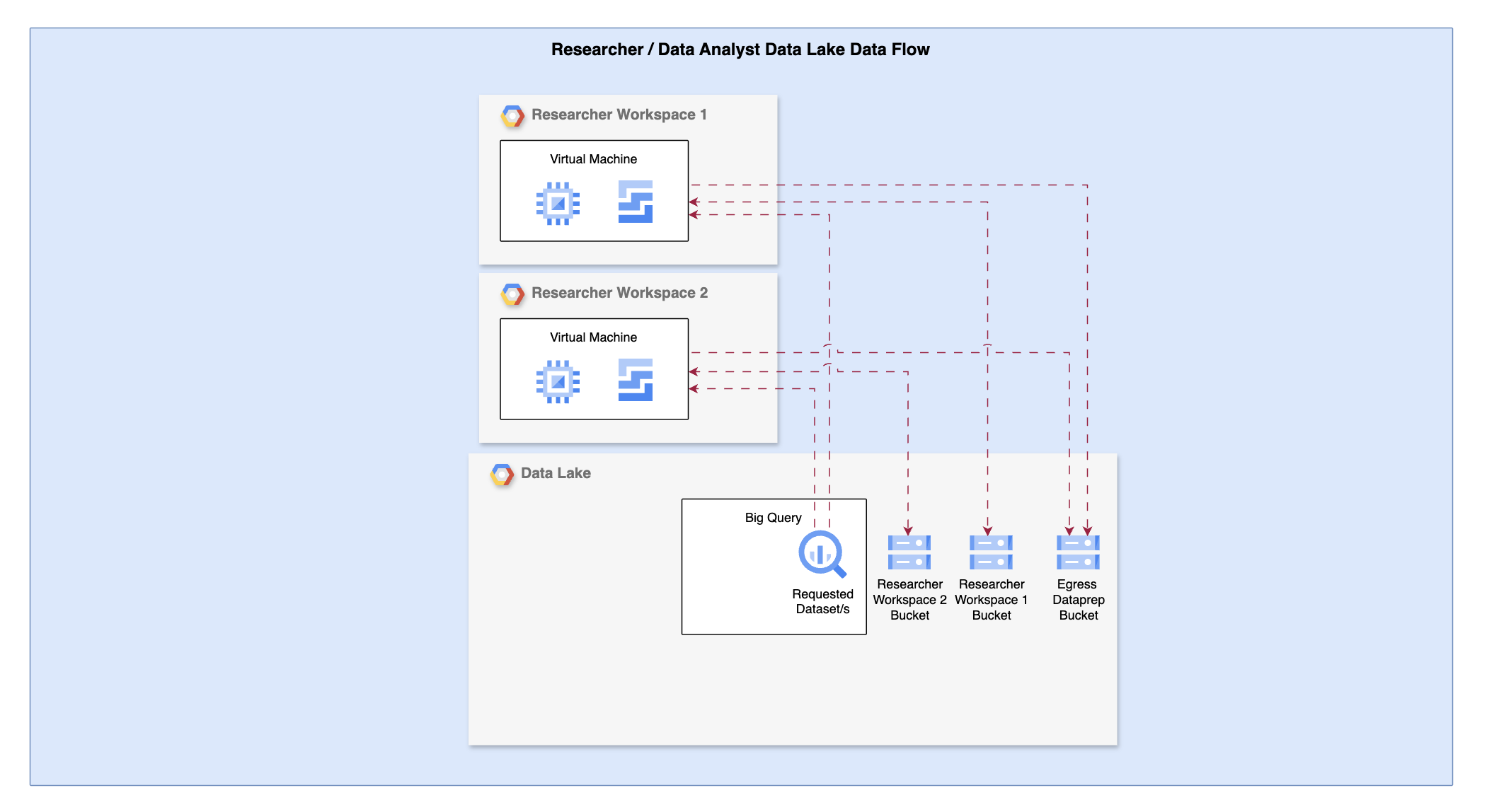

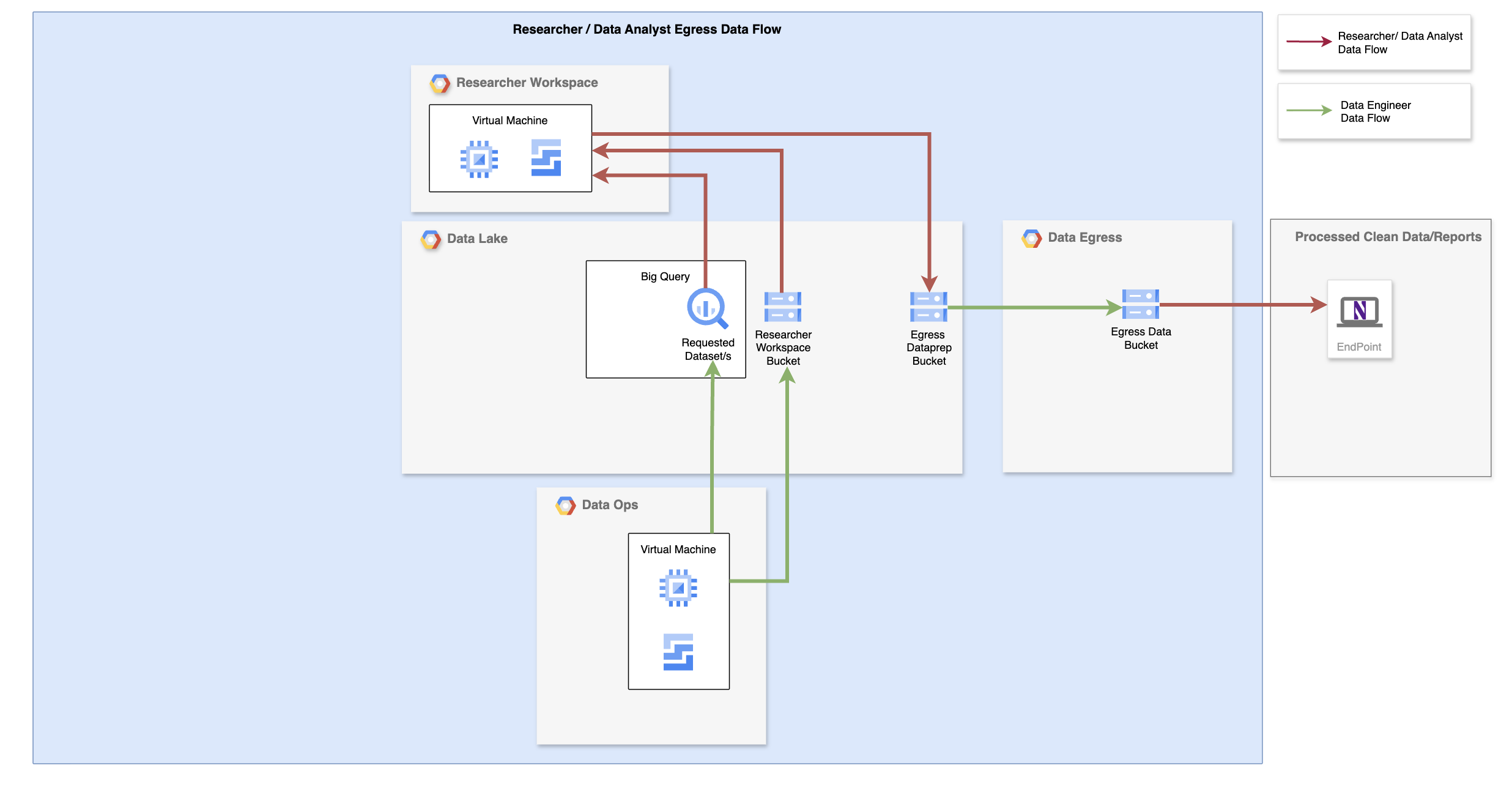

Researcher/Data Analyst

Access and Resources:

Can manage only the specific storage buckets to which they have been granted access.

Can view, list, and add data to the “Egress Dataprep” bucket for review and approval.

Project Operations:

Accesses BigQuery datasets and performs analysis using VMs from the Workspace Project.

Uses the “Researcher Workspace” buckets for storing and managing their own analysis data.

Publishes data to the “Egress Dataprep” bucket when data needs to be downloaded from the SDE environment.

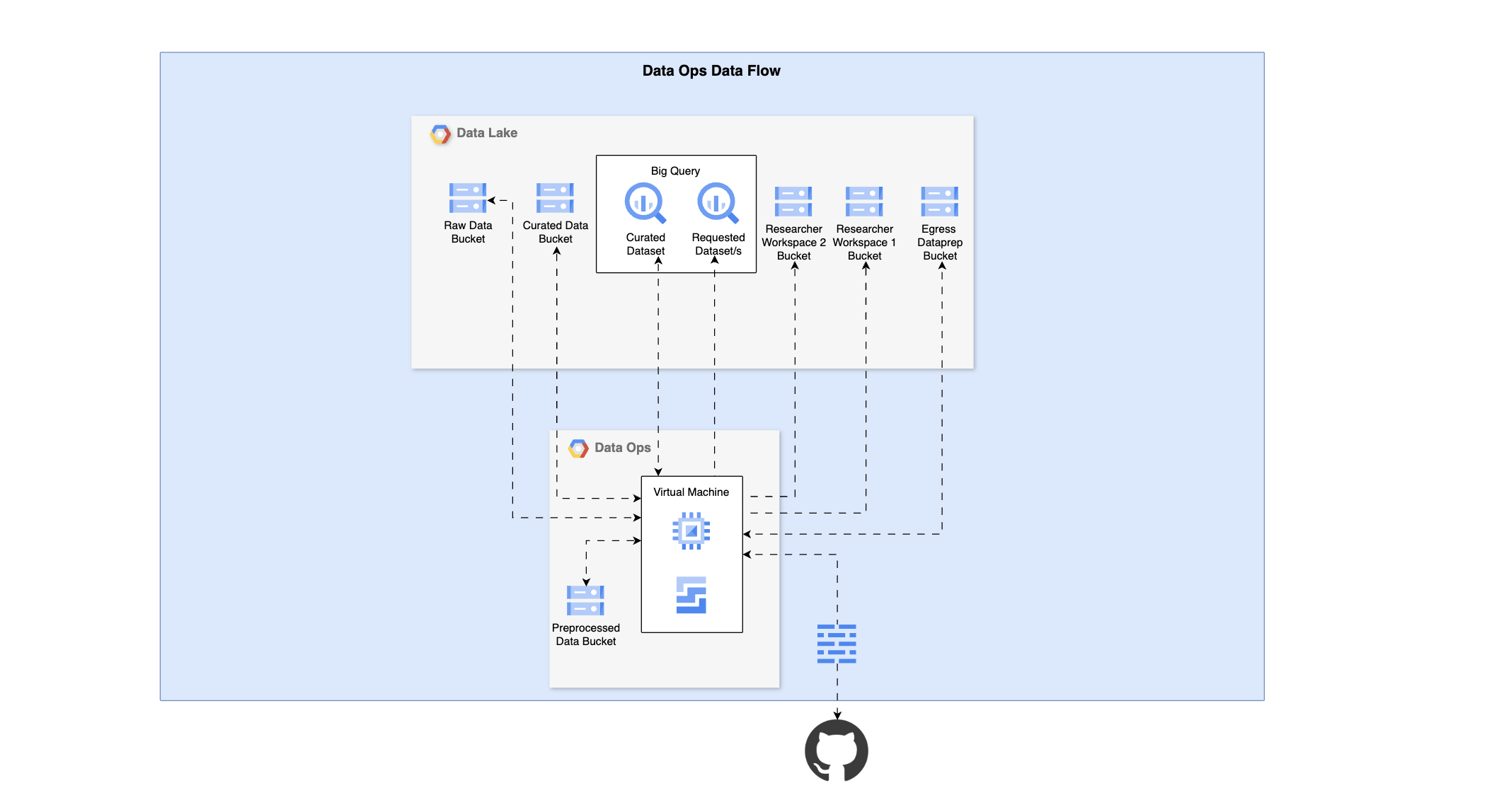

Data Ops Project

The Data Ops Project is for processing and cleaning data within the SDE environment. It offers Data Engineers a VM equipped with analytical and statistical software, data transfer tools, and automated security scanning. The project also includes dedicated storage buckets for intermediate files and supports direct access to Data Lake buckets and BigQuery tables. Within this project, Data Engineers can perform transformations, organize datasets, and create new BigQuery tables to prepare data for analysis. By combining processing capability with secure storage and connectivity, the Data Ops Project functions as the core workspace for preparing raw data into usable, structured resources.

Data Engineer

Access and Resources:

Access to a VM in the project.

Access to the approved GitHub organization to push/pull code from the VM web browser or command line.

Each SDE environment comes with a single Data Ops Project. Multiple Data Engineers can use the same VM concurrently. Additional VMs can be added to the project if required; contact Northwestern IT to discuss infrastructure changes to your SDE environment.

Access to a “Preprocessed Data” storage bucket. Data Engineers can create new storage buckets in the project if needed.

Project Operations:

The Data Ops Project is primarily for Data Engineers to process and curate data. Data Engineers should also use the Data Ops Project for any analytical work.

Data Engineers can move data between the Data Lake Project and Data Ops Project for processing.

Working datasets can be stored within buckets available in the Data Ops Project before being moved back to the Data Lake Project.

Data Engineers have access to Google Cloud Functions to transform data and create BigQuery tables if preferred.

A Firewall Appliance performs security checks and scans all incoming data to maintain integrity and safety.

Researcher/Data Analyst

Researcher/Data Analyst users have no access to the Data Ops Project. They can access data that has been imported into the SDE environment once a Data Engineer has reviewed it and added it to the Data Lake Project. Researchers/Data Analysts can use the Workspace Project for data analysis work.

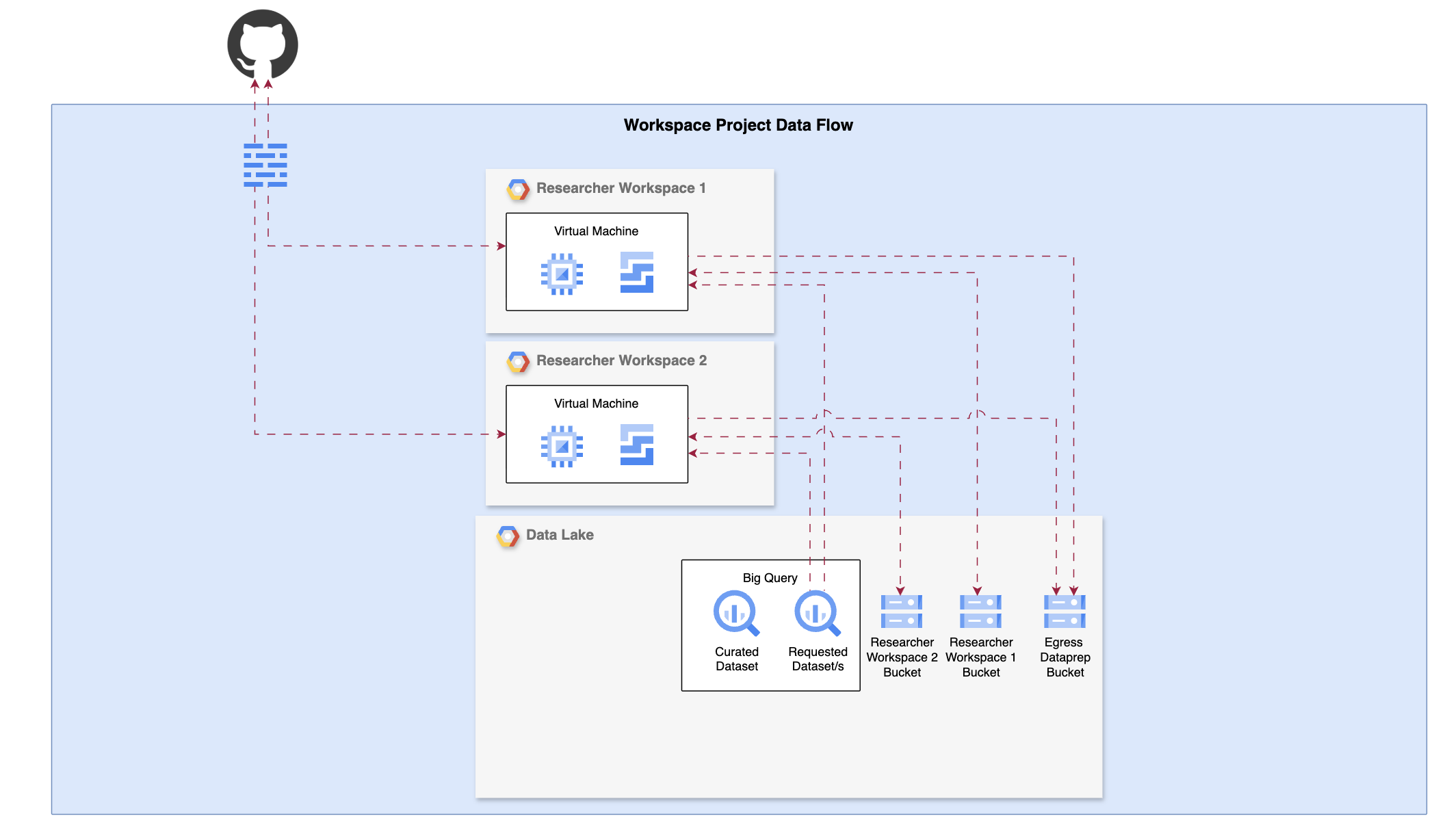

Workspace Project

Workspace Projects are for researchers and data analysts to access, analyze, and produce insights from curated datasets. Users connect to a VM equipped with analytical, statistical, and data processing software. The project supports secure access to storage buckets and BigQuery tables in the Data Lake Project and allows users to pull or push code from the approved GitHub organization to support their analyses.

Data Engineer

Data Engineers do not have access to Workspace Projects. They should use the Data Ops Project for any analytical work.

Researcher/Data Analyst

There may be more than one Workspace Project in an SDE environment. Multiple Workspace Projects can be created when groups of Researcher/Data Analyst users of an SDE environment require different permissions to files or independent computational resources. Setup of researcher Workspace Projects is done through requests to Northwestern IT.

Access and Resources:

Access to a VM in the project

Access to storage buckets and BigQuery tables in the Data Lake Project. There are no storage buckets in the Workspace Projects.

Access to the approved GitHub organization to push/pull code from the VM web browser or command line.

No Internet Access

Project Operations:

Workspaces are designed for data analysts to explore and analyze curated datasets, not for raw data processing.

Data and files can be stored in the dedicated storage buckets assigned specifically to each user in the Data Lake Project.

Users may pull code from Enterprise GitHub using the VM browser or command line.

Automated security scans (Firewall Appliance) check all incoming files for malicious code.

When data needs to leave the SDE, data should be moved to the “Egress Dataprep” bucket in the Data Lake Project. Data Analysts cannot egress data themselves; they need to request data egress from a Data Engineer.

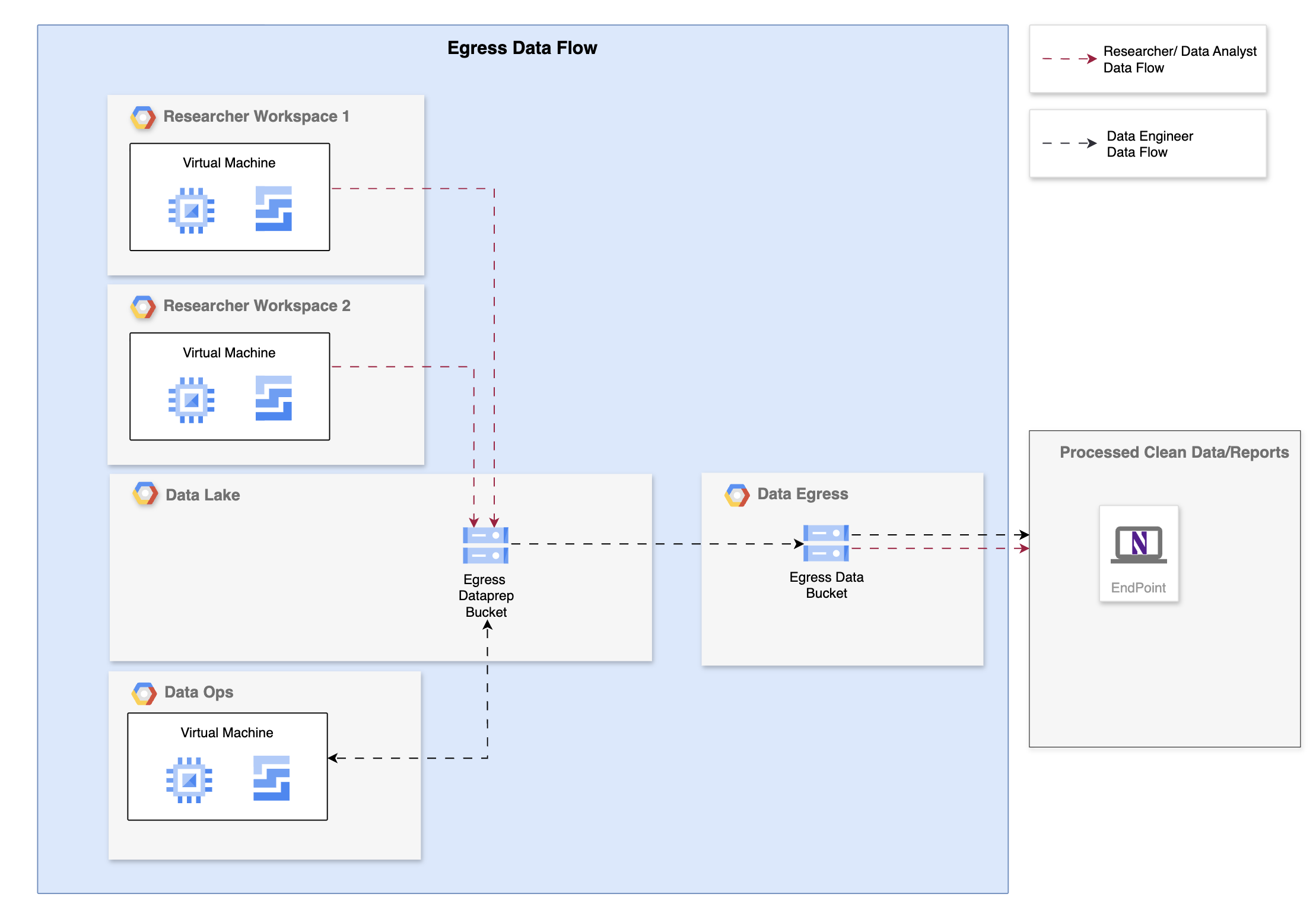

Data Egress Project

The Egress Project is a secure landing zone for data leaving the SDE. Each SDE environment comes with a single Egress Project. Both Data Engineers and Researchers/Data Analysts have access to this environment, but the levels of access vary. The Egress Project supports the use of proper controls and approval processes for data egress.

Data Engineer

Access and Resources:

Create and assign permissions to storage buckets

Move data into the Egress Project from the Data Lake Project, specifically from the “Egress Dataprep” bucket.

Project Operations:

Manage data egress procedures

Download approved data to their managed endpoint

Separate buckets are created for each egress request, with specific access controls to maintain security.

A log of all approved and egressed data should be maintained by the Data Engineer to track data movements.

Once data is egressed, staged copies in the Data Lake are removed to maintain security.

All egress operations require prior approval and adherence to enterprise data handling policies.

Researcher/Data Analyst

Access and Resources:

View/download access to storage buckets, as managed by a Data Engineer

Project Operations:

Download approved data to their managed endpoint. See Data Egress Procedures for further details.
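Taken together, the five projects describe a path that data follows from an external source to a managed endpoint. The Python sketch below models that path as a directed graph so that a proposed move can be checked against it. It is a deliberate simplification: the stage names and the `is_allowed` and `path_exists` helpers are hypothetical, BigQuery is omitted, and a real environment permits additional moves (for example, Data Engineers can move data in both directions between the Data Lake Project and the Data Ops Project).

```python
from collections import deque

# Simplified, hypothetical model of the main data path through the SDE.
ALLOWED_MOVES = {
    "external_source": {"ingress_vm"},
    "ingress_vm": {"raw_data_bucket"},
    "raw_data_bucket": {"data_ops"},
    "data_ops": {"curated_data_bucket"},
    "curated_data_bucket": {"researcher_workspace_bucket"},
    "researcher_workspace_bucket": {"egress_dataprep_bucket"},
    "egress_dataprep_bucket": {"egress_project_bucket"},
    "egress_project_bucket": {"managed_endpoint"},
}


def is_allowed(source: str, destination: str) -> bool:
    """Return True if data may move directly from source to destination."""
    return destination in ALLOWED_MOVES.get(source, set())


def path_exists(start: str, end: str) -> bool:
    """Breadth-first search for any sequence of allowed moves."""
    seen = {start}
    queue = deque([start])
    while queue:
        stage = queue.popleft()
        if stage == end:
            return True
        for nxt in ALLOWED_MOVES.get(stage, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False
```

In this model there is no direct move from the “Raw Data” bucket to a managed endpoint; data reaches an endpoint only by passing through the “Egress Dataprep” bucket and the Egress Project, which mirrors the approval steps described above.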